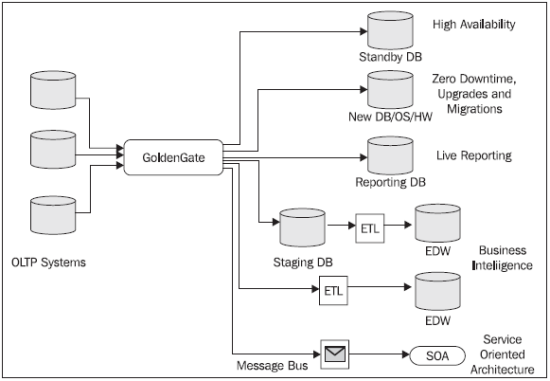

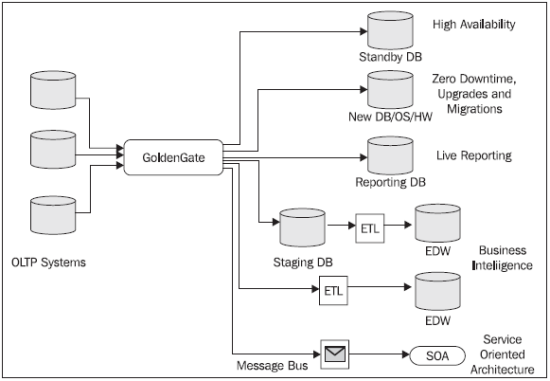

GoldenGate is a tool, acquired by Oracle in 2009, that lets us capture, route, transform and deliver data between heterogeneous systems in real time with very low impact on the source systems.

So basically Oracle GoldenGate (OGG from now on) moves data from point A to point B, but it does it in a brilliant way. Let’s take a detailed look at its main features:

Very low impact on data sources – OGG does not disturb the source systems when fetching new data. To achieve that, it doesn’t query the database layer directly; instead it constantly watches the redo files (or the equivalent technology) for changes to know when a transaction has been committed.

Heterogeneous Systems – Thanks to ODBC and the VAMs (Vendor Access Modules), OGG can interconnect databases from a good number of vendors, although only those that offer referential integrity can be chosen as source systems.

Real time – Maybe you’ve heard that OGG can transfer data with sub-second latency. That is true if your integration scenario meets certain conditions and the physical distance between source and destination is not huge. In any case, even in the worst circumstances we are talking about really low latency.
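
To make the low-impact idea more concrete, here is a minimal Python sketch of log-based change capture in general. It is not OGG's actual implementation or API: `LogRecord`, `LogCapture` and the in-memory list standing in for a redo log are all hypothetical names invented for this illustration. The point is only that the reader tails a log from its last position and forwards the changes of committed transactions, without ever querying the tables themselves.

```python
from dataclasses import dataclass


@dataclass
class LogRecord:
    """One entry in a simulated redo log (hypothetical structure)."""
    txn_id: int
    op: str            # "INSERT", "UPDATE", "DELETE" or "COMMIT"
    payload: str = ""


class LogCapture:
    """Tails a log and emits changes only once their transaction commits."""

    def __init__(self):
        self.position = 0   # index of the next unread log record
        self.pending = {}   # txn_id -> changes seen but not yet committed

    def poll(self, log):
        """Read the records appended since the last poll; return committed changes."""
        committed = []
        for record in log[self.position:]:
            if record.op == "COMMIT":
                # The transaction is complete, so release its buffered changes.
                committed.extend(self.pending.pop(record.txn_id, []))
            else:
                self.pending.setdefault(record.txn_id, []).append(record)
        self.position = len(log)
        return committed


# The source system only appends to its log; the capture side only reads it.
redo_log = [
    LogRecord(1, "INSERT", "row a"),
    LogRecord(2, "UPDATE", "row b"),
    LogRecord(1, "COMMIT"),
]
capture = LogCapture()
first_batch = capture.poll(redo_log)    # only transaction 1 has committed

redo_log.append(LogRecord(2, "COMMIT"))
second_batch = capture.poll(redo_log)   # transaction 2 is released now
```

Because the capture side keeps its own read position and a buffer of uncommitted changes, the source never has to answer queries about what changed; it just keeps writing its log as usual.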

15 November, 2011