01 Feb 2010 Informatica OBI Applications ETL: the Slowly Changing Dimensions logic

One of the greatest advantages of buying an OBI Application – Project, Supply Chain or any other of the many Analytics flavours – is the set of predefined ETL mappings, sessions and workflows that come with it.

Although there is a good chance that the OLTP data source is highly customised, the online Oracle documentation is full of information that can make the ETL developer’s life easier. That said, there are some important ETL tasks whose logic isn’t very easy to find. They are like black boxes: you customise them a little bit – some fields behind the X_CUSTOM placeholder here, a small datatype change in the target table there – and they operate their magic.

So let’s reveal the truth behind the veil of a very important out of the box ETL logic in OBI Apps: the Slowly Changing Dimension management mappings.

(NOTE: The rest of this article is based on the assumption that you already know what a Slowly Changing Dimension (SCD) is and all its different types.)

Starting slow: the general structure

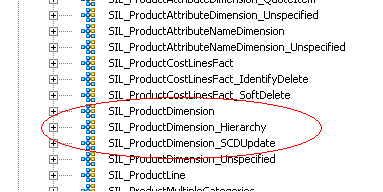

Recognising dimensions that Oracle decided should be SCDs in the OOTB Informatica repository is easy. In the SILOS folder, which contains all Source Independent Load mappings (i.e. loading from the Staging Area to the Reporting tables), you will find a mapping for each of these dimensions named like this:

SIL_<entity>Dimension_SCDUpdate.

The structure of an SCD extract, transformation and load is essentially managed by three mappings:

SDE_<entity>Dimension

SIL_<entity>Dimension

SIL_<entity>Dimension_SCDUpdate

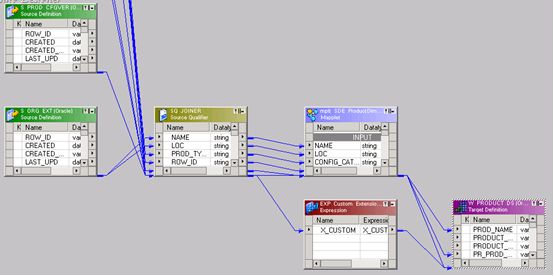

The first mapping is available in an Adapter folder, related to the data source you are using to feed your precious data warehouse, and looks like Figure 2.

It basically joins together the main OLTP table with auxiliary ones to extract data to send to the staging table (which usually take the name of W_<entity>_DS).

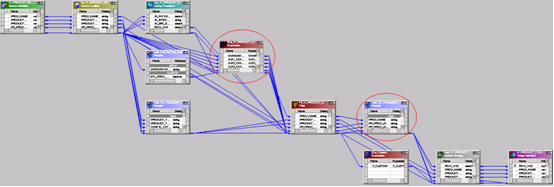

The second mapping is in SILOS, and manages the insert and update of the rows coming from the staging tables into the final reporting table (usually called W_<entity>_D). Dealing with surrogate keys and lookups is likely to be more complex than the SDE.

The third mapping is in SILOS as well, and its only task is to update the effective dates and a set of column values in the SCD. The effect range – only the most recent records or the whole history – depends on specific variable settings.

Going deep: transformations, variables, lookups…

With regards to managing SCD Type II, the OOTB Oracle approach is pretty standard. Two date fields are used to keep track of history (EFFECTIVE_FROM_DT, EFFECTIVE_TO_DT) and a flag determines the most current version of a specific record (CURRENT_FLG).

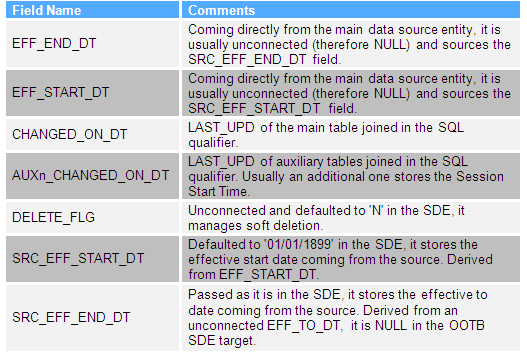

These fields are set using a series of other auxiliary fields, which are managed throughout the execution of the three mappings.

The SDE mapping logs the EFFECTIVE_FROM and TO dates coming straight from the data source (if available) and keeps track of the last updated date on the main entity, plus all relevant auxiliary tables. This information will then be used to evaluate if a specific row is eligible for Type II update or not.

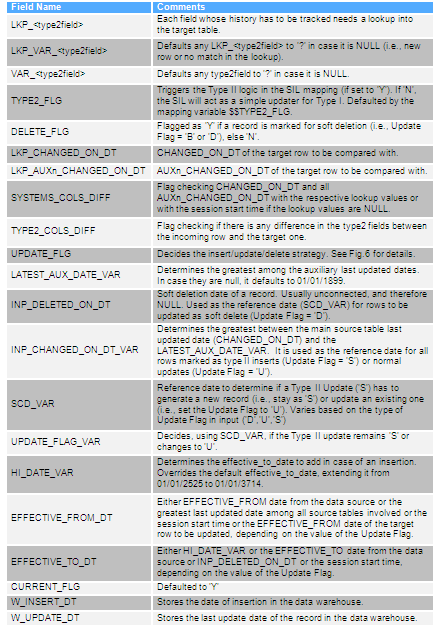

The SIL mapping contains the bulk of the logic to update the Type II fields and populate the effective dates.

The update strategy is decided based on the following factors:

- Is it a new row? (i.e. does the lookup into the target table retrieve anything?)

- Have any of the Type II fields changed?

- Are any of the last updated dates on the incoming row greater than the ones on the existing row?

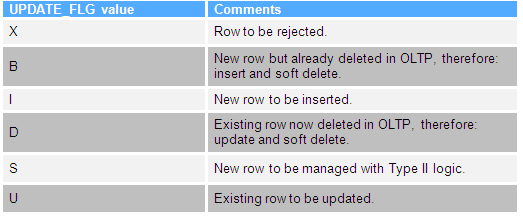

Depending on these conditions, the UPDATE_FLG field will provide the update logic as per Figure 7.

UPDATE_FLG decides the surrogate primary key population as well: if it is a row to be inserted, then takes the current value of a sequence generator, else uses the key of the record to update.

The final value of the EFFECTIVE_FROM_DT is chosen among the auxiliary fields depending on the value of the Update Flag as follows:

- If the record is to be inserted (UPDATE_FLG = ‘I’ or ‘B’), it returns the EFFECTIVE_FROM date from the data source. If it is null, then defaults it to 01/01/1899.

- If the record is a Type II change (‘S’), it returns the greatest last updated date among all source tables involved. If it is null, then returns the session start time.

- If the record is a simple update (‘U’), it returns the EFFECTIVE_FROM date of the target row to be updated.

- In all remaining cases (Update Flag is null or ‘D’), returns NULL.

EFFECTIVE_TO_DT is calculated as follows:

- If the record is a new row not marked for soft deletion (UPDATE_FLG =’I’ or ‘S’), then returns the HI_DATE_VAR (either the non-defaulted EFFECTIVE_TO date from the data source, or 01/01/3714).

- If the record is a new row marked for soft deletion (‘B’), returns either the non-defaulted EFFECTIVE_TO date from the data source or the first not null between the INP_DELETED_ON_DT (which anyway is in almost all cases NULL) and the session start time.

- If the record is a simple update, then returns the HI_DATE_VAR, unless the rare case of the EFFECTIVE_FROM date in input is greater than the HI_DATE_VAR itself, in which case it returns the original EFFECTIVE_TO date from the data source.

Finally, the SIL mapping decides the update strategy of the target reporting table as follows:

- If the record is a new one (UPDATE_FLG either ‘I’,’B’ or ‘S’) then Insert, else (UPDATE_FLG = ‘U’ or ‘D’) Update.

Clear as mud? In the likely case that the aforementioned pieces of logic are a bit cryptic, my suggestion is to have a thorough look at the variables/fields and write down an example.

The final stroke: updating the dates

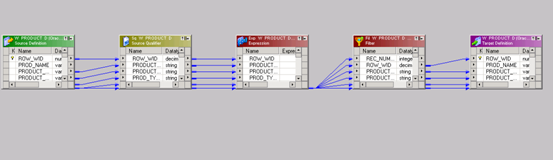

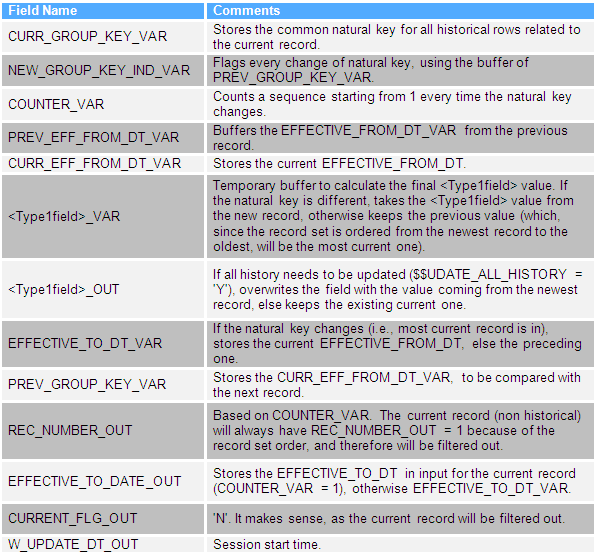

The SCDUpdate mapping, as stated before, takes care of updating the historical records already present in the target table.

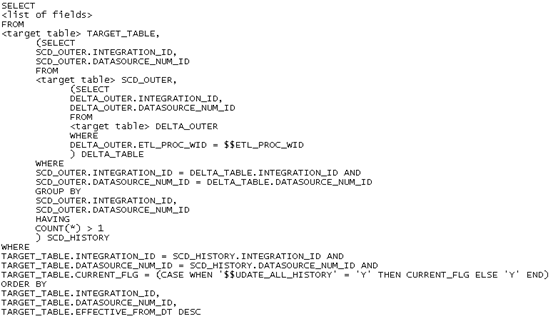

The SQL Qualifier transformation contains a SQL override structured as follows:

Figure 8: The SQL override in the SCDUpdate mapping. For all those who always dreamt of becoming a DBA.

The DELTA_TABLE sub-query does a first cut on the rows to retrieve, selecting only the target records that have been processed in the preceeding load. The outer sub-query, SCD_HISTORY, retrieves only records that have been historically tracked at least once.

Finally, the whole query checks the $$UDATE_ALL_HISTORY (sic!)parameter and defines if it has to consider all historical records or only the most current ones. In other words, if the parameter is set to ‘Y’, then each Type 1 change will be propagated across all records related to a specific natural key, otherwise only the records flagged as current.

Note how the recordset is ordered by EFFECTIVE_FROM date in decreasing order, which means that the most current records will be first for each natural key.

So, that’s basically it. Complex? Sure is. Improvable? Possibly.

You will notice a few little differences depending on the entity – all examples here have been derived from the Product SCD Dimension in SILOS – but the general strategy remains the same.

Will this change with the arrival of Oracle 11g? Only time will tell…