07 May 2025 Automating Data Ingestion from An External API with Snowflake

Integrating external data sources via APIs is a common requirement for modern data warehouses. Traditionally, this process depended on external tools or scripts to fetch and load data, adding complexity and potential points of failure. However, Snowflake now offers a way to streamline this ingestion process entirely within its own environment using only native components, simplifying data retrieval and also enhancing logging and automation capabilities. In this article, we’ll outline the necessary steps to configure Snowflake for external API data ingestion.

Before getting started, you’ll need:

- A Snowflake account with the appropriate user privileges.

- Access to the API you intend to ingest data from.

- Snowflake Snowpark with the Python runtime enabled.

Now you’re ready to start integrating API data into your Snowflake environment!

Key Components

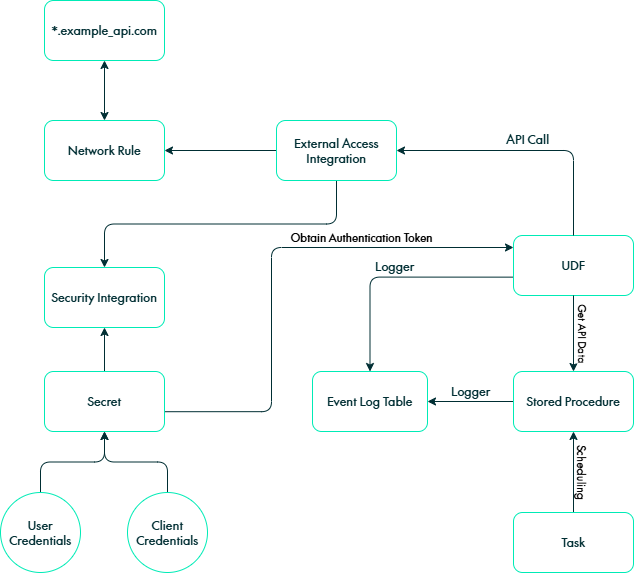

The main Snowflake components involved are:

- User-Defined Function (UDF): Handles API calls, processes responses, and returns structured data.

- Stored Procedure: Manages the ingestion process, including logging and error handling.

- Task: Automates the execution of the stored procedure on a scheduled basis.

- Event Table: Stores and tracks changes, providing an audit trail for data ingestion processes.

Together, these components create a reliable, automated workflow to integrate API data into Snowflake whilst also ensuring stability and traceability.

Figure 1: Diagram of the relevant components for external API ingestion

Configuring Snowflake for External API Access

Several key configurations must be implemented to enable Snowflake to communicate with a third-party API and to guarantee a secure, efficient, and scalable integration process, allowing Snowflake to retrieve and process data without any glitches. For the purposes of this article, we’ll walk through ingesting data from the Microsoft Graph API.

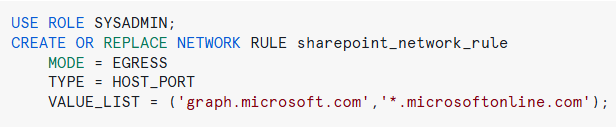

Define A Network Rule

The first step is to establish a network rule to permit outbound connections from Snowflake to the target API by ensuring that Snowflake has the necessary permissions to communicate with the external service and fetch data as needed. Without this setup, Snowflake wouldn’t be able to establish a direct connection to the API:

Figure 2: Creating the network rule

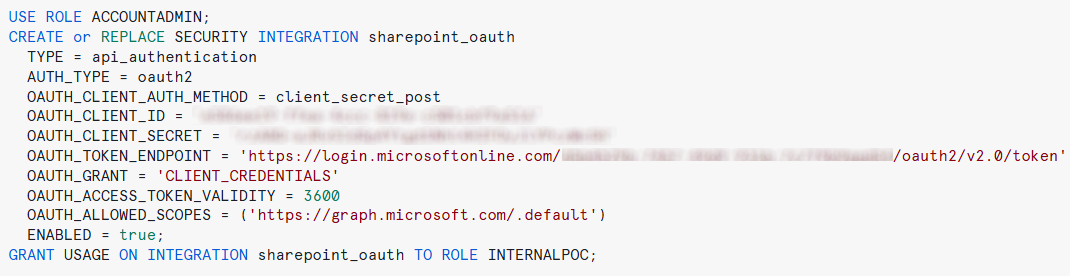

Set Up A Security Integration

The next step is to configure a security integration to manage the authentication flow required by the API. Different APIs use different authentication mechanisms, and in our example, we are working with an API that uses OAuth client credentials. To authenticate successfully, we need to provide the following details:

- Client ID: A unique identifier assigned to the application accessing the API.

- Client Secret: A confidential key used to authenticate the application.

- Token Endpoint: The URL where Snowflake requests authentication tokens.

- List of Scopes: The permissions granted to Snowflake for accessing specific resources within the API.

Setting up this integration ensures that Snowflake can securely request and manage API authentication tokens without the need for manual intervention:

Figure 3: Creating the Security Integration

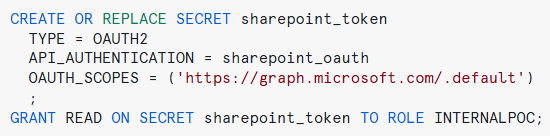

Configure Secure Token Management

Once the authentication mechanism is in place, we need a secure method to store and retrieve the authentication token obtained from the API. This is where Snowflake Secrets come into play. With Secrets, we can securely store sensitive credentials and tokens within Snowflake’s infrastructure, minimising the risk of exposure or mismanagement, which is crucial in maintaining security and compliance whilst automating API data ingestion:

Figure 4: Creating a Snowflake Secret

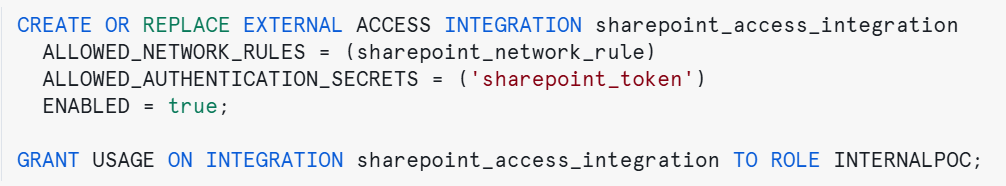

Establish An External Integration

Finally, we need to set up an external integration to enable Snowflake to retrieve data from external endpoints. This integration allows Snowflake to call the API, extract relevant datasets, and then store them for further analysis:

Figure 5: Creating an external integration

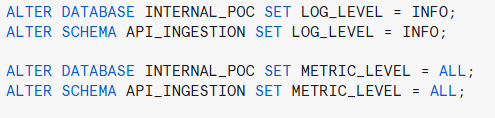

Setting Up Snowflake to Register Logs and Traces

To effectively track logs and traces throughout the entire process, we first need to configure Snowflake to register these events in the event table. This involves specifying the level of detail required for logging, ranging from basic informational messages to detailed debugging traces. By defining these parameters, we ensure that the ingestion pipeline remains transparent and can be monitored for errors, performance bottlenecks, and anomalies.

Once configured, log events must be added into the UDF and stored procedure to capture key execution details. If a high level of tracing is enabled, some events will be tracked automatically by Snowflake:

Figure 6: Enabling traces

Figure 7: Enabling logs and metric capture

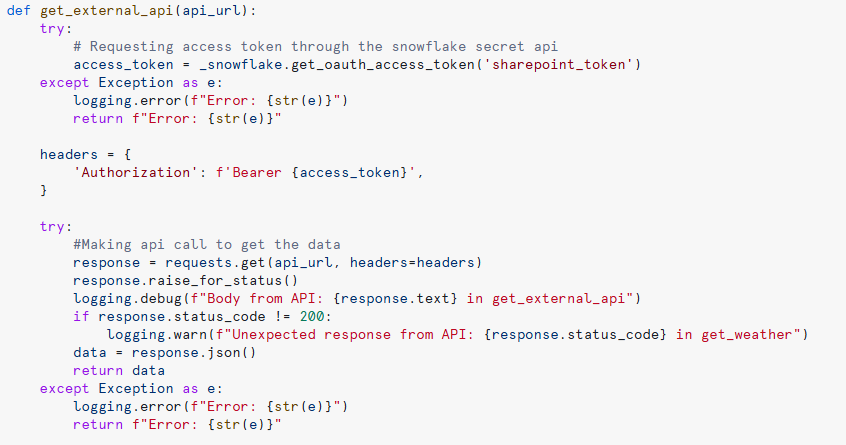

Calling An API from A Snowflake UDF

With the external connection established and logging enabled, the next step is to create a UDF to call the API and retrieve data. Within the UDF code, it’s important to use the Snowflake Secret Management API to handle authentication securely. This ensures that the authentication token is retrieved dynamically and included in the API request headers, allowing smooth, secure data access:

Figure 8: Snowflake UDF to make a simple API call

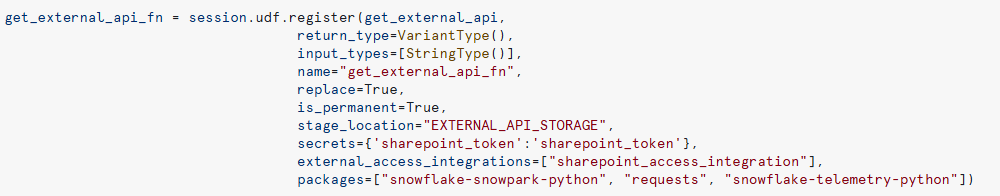

Be sure to include the secret and external access integration in your UDF parameters to guarantee proper external access and authentication:

Figure 9: Registering the UDF

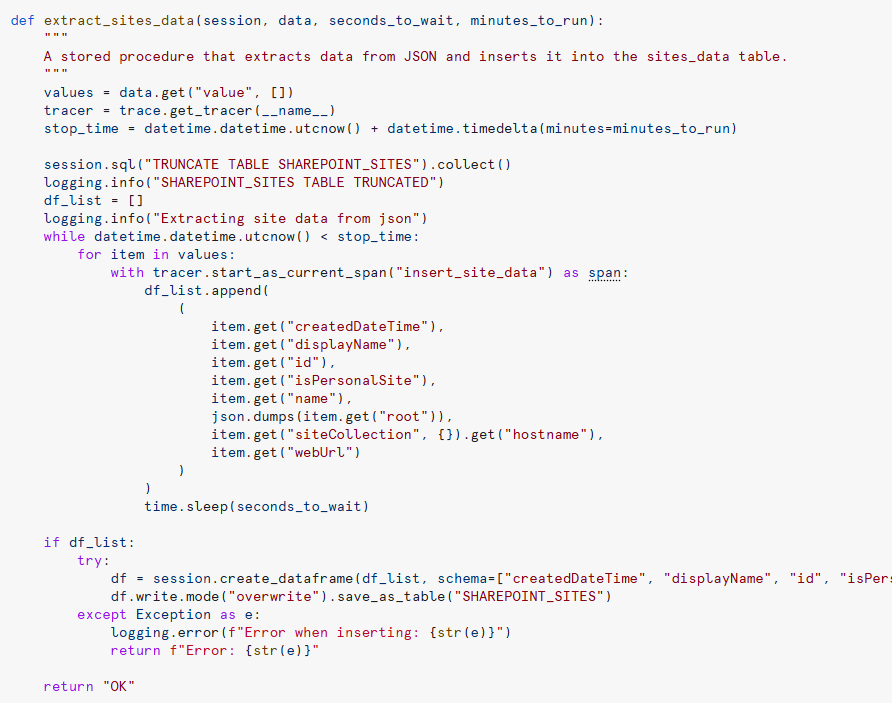

Ingesting API Data into Snowflake with A Procedure

Once the data has been retrieved, the next step is to process it then store it in a table for further exploration and analysis. This is done with a stored procedure, which takes the UDF result as input, transforms it, then inserts it into the target table. The stored procedure ensures data consistency, handles potential errors, and logs relevant events, making the ingestion process both efficient and reliable.

Remember that this process should be tailored to accommodate the structure and format of the response from each API endpoint:

Figure 10: Stored procedure to process the API data

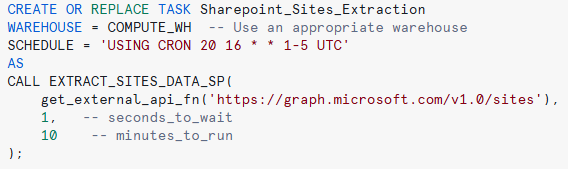

Orchestrating Everything with Snowflake Tasks

To schedule this process so that everything runs smoothly and data is ingested on time, there are Snowflake Tasks to orchestrate the workflow:

Figure 11: Task creation

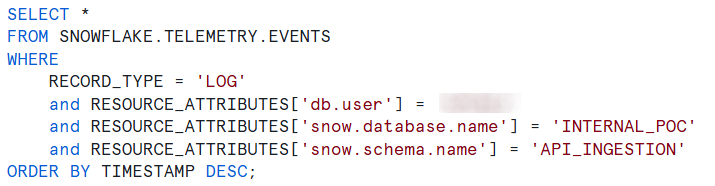

Logs

To monitor the events that occur during task execution, you can review all the logs inserted in the event table during the execution of the stored procedure and UDF. To do so, run a query like this:

Figure 12: Querying log data

Conclusion

By automating API data extraction with a well-structured function, you enable Snowflake to ingest external data seamlessly without relying on additional ETL tools. This approach enhances efficiency, security, and scalability, allowing your organisation to integrate third-party data sources effortlessly.

Ready to streamline your data ingestion? Here at ClearPeaks, we specialise in implementing scalable and secure Snowflake solutions adapted specifically to your needs. Contact us today to discover how we can help you to unlock the full potential of your data in Snowflake!