29 Jun 2011 Data Change Capture Process: Full Load vs Incremental Load

In today’s business world, quick access to data is key in making the right decisions at the right time.

This article, based on a real case, highlights the importance of using an incremental load using the change data capture technique for loading data to the dashboards for the end users.

Scenario

This scenario is located in a telecommunications company, where their requirement is to have dashboards showing the daily and updated data of the marketing and financial department.

In order to accomplish this, an ETL process has been developed that loads the data of the different sources to the KPI’s database.

The different data sources are:

· Siebel RCM: This contains the transactions for the services and products contracted by the customers.

· Oracle JD Edwards: This contains the incoming revenue transactions derived from the services and products contracted by the customers and the supplier payment transactions.

The dashboards are related to the:

· CRM area

· Financial area

The objectives of this solution regarding these requirements are:

· The information of these dashboards should always be updated

· The ETL process should not take much time

· The data loaded has to reflect that of the data sources

· The ETL process should be maintainable

· The information of these dashboards should always be available

The solution: Initial full load and incremental load (CDC)

The solution applied was:

- An initial full load of the data

- Incremental load: applying ongoing changes and keeping historical data on a predefined schedule.

In this case the solution is to apply an incremental load instead of full load (truncate / insert) for the following reasons:

· Information must be available 24 hours x 7 days

The truncate/insert could have a consequence that the information will be not be available for a certain time period.

· The changes of the data only represents 10% total rows

The change in the data capture process doesn’t take much time as the changes only represent part of the data.

· It avoids moving all data every time the process is executed

The process will be faster as it doesn’t need to load all data every time.

· It should manage only the changes of the last 3 months

The change data capture process doesn’t take much time as the process only checks part of the data and not all of it.

Moving to incremental load strategy will require a previous analysis:

· Determine which changes to capture:

In this case the data of the tables from the data sources have modifications every day related to the previous day, which is why it has to determine which changes the process has to capture in order to have the data updated every day.

The change that has to be captured is the value column of the fact table. It has to compare the value of the incoming row with the value of the existing row.

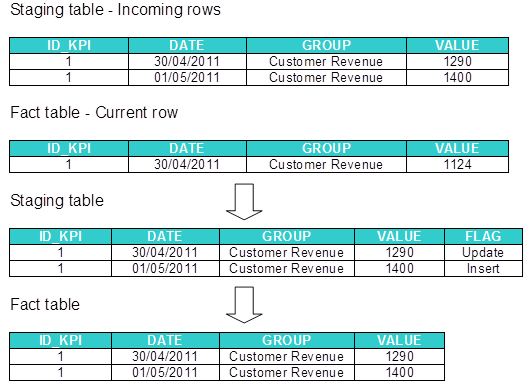

Find an example below:

· Design a method to identify changes.

The method in this case is identified if the incoming row already exists in the database; if it exists update the value for the value of the incoming row.

It compares the values of the columns of the primary key or unique key of the fact table with the incoming data.

· Determine which changes should be updates and which should be inserts

Taking into account the result of the comparison of the columns of the primary key or unique key of the rows, it will label the row with a flag.

> If not exist -> FLAG=’Insert’

> If exists -> FLAG=’Update’

· Take a look at the time stamping of the rows where you want to do the changes

Regarding the requirements and the analysis of the data sources, the modifications can be done up to a maximum of the last 3 months. The process checks if there are changes of the data of the last 3 months avoiding having to check all of the data.

The change data capture process will only check changes in the fact table where the data of the incoming row is after the last 3 months of last time date captured

This picture illustrates the diagram:

This is an example of the process:

In conclusion we can deduce that incremental loading is more efficient than full reloading unless the operational data sources happen to change dramatically. Thus, incremental loading is generally preferable.

However, the development of ETL jobs for incremental loading is ill-supported by existing ETL tools. In fact, currently separate ETL jobs for initial loading and incremental loading have to be created by ETL programmers. Since incremental load jobs are considerably more complex their development is more costly and error-prone.

To overcome this obstacle in this scenario we proposed the Change Data Capture (CDC) technique.