24 Apr 2024

Real-Time Data Monitoring Using Scalable Distributed Systems

Companies nowadays must process vast quantities of data in tight timeframes using Big Data technologies. Beyond its sheer volume, this data often has to be processed in real time, so the underlying architecture must be flexible, distributed, and scalable. This scenario is particularly relevant to the logistics sector, for example, where IoT (Internet of Things) devices monitor a series of indicators that, once processed and analysed, help companies assess drivers’ behaviour. In this blog post, we’ll explore some modern software solutions that allow businesses to design distributed and scalable systems that perform ETL (Extract, Transform, Load) operations in real time.

Scalable and Distributed Processing

A common bottleneck in data processing is a system’s capacity to ingest, transform, and deliver the raw data that is later used to offer powerful insights into key aspects of the business. This bottleneck can be addressed through scalability (adding processing power as demand grows) and distribution (spreading data processing tasks across multiple machines). A system that is both scalable and distributed can also balance load across its machines and tolerate the failure of individual components.

The Kappa architecture is one software design that meets these needs and challenges within the Big Data sector. It treats all data as a continuous stream and consists of two layers: a stream-processing layer, which processes the data flowing into the system in real time, and a serving layer, which stores the results of that processing and presents them to the end user.
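The two layers can be sketched in a few lines of Python. This is a toy, single-process illustration built on our own assumptions. The `Event` schema, the class names and the "maximum speed per vehicle" metric are all hypothetical. A plain Python list also stands in for the durable, replayable event log that a real Kappa deployment would rely on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One raw sensor reading (hypothetical schema)."""
    vehicle_id: str
    speed_kmh: float


class ServingLayer:
    """Holds the query-ready view that end users read from."""

    def __init__(self):
        self._max_speed = {}

    def upsert(self, vehicle_id, speed_kmh):
        self._max_speed[vehicle_id] = speed_kmh

    def query(self, vehicle_id):
        return self._max_speed.get(vehicle_id)


class StreamLayer:
    """Processes every event as it arrives and pushes results downstream."""

    def __init__(self, serving):
        self._serving = serving
        self._state = {}  # running maximum speed per vehicle

    def process(self, event):
        current = self._state.get(event.vehicle_id)
        if current is None or event.speed_kmh > current:
            self._state[event.vehicle_id] = event.speed_kmh
            self._serving.upsert(event.vehicle_id, event.speed_kmh)


def run_pipeline(event_log):
    """Build a fresh view by replaying the whole log through the stream layer.

    In a Kappa design, reprocessing (e.g. after changing the logic) means
    replaying the log through new code rather than running a separate batch job.
    """
    serving = ServingLayer()
    stream = StreamLayer(serving)
    for event in event_log:
        stream.process(event)
    return serving


log = [Event("van-1", 42.0), Event("van-2", 55.5), Event("van-1", 61.0)]
view = run_pipeline(log)
print(view.query("van-1"))  # 61.0
```

The key design choice is that only one code path exists. Both live processing and reprocessing go through `StreamLayer.process`.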

Kappa architectures are typically:

- Distributed: A distributed system is one in which data and processing tasks are spread across multiple machines or nodes within a network. A distributed system offers benefits such as:

  - Fault tolerance: The ability of a system to keep operating reliably even if one of its parts fails.

  - Load balancing: The ability to spread work evenly across nodes so that no single machine becomes a bottleneck.

  - Parallel processing: Tasks can be executed simultaneously on multiple parts of the system, leading to faster data processing.

- Scalable: A system’s ability to handle growing data volumes and increasing workloads. As data or processing requirements increase, the system can expand its resources to meet these new demands.
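Parallel processing and scaling out can be illustrated with a minimal sketch that computes an average over speed readings. The data is split into slices, each slice is processed independently, and the partial results are then combined. Threads in a single process stand in here for separate machines in a cluster, and the function names are hypothetical. Scaling out corresponds to raising `workers`, and the result stays the same.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, n_parts):
    """Split items into at most n_parts contiguous slices, one per worker."""
    size = -(-len(items) // n_parts)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def partial_stats(readings):
    """Work each node does on its own slice: a count and a sum."""
    return len(readings), sum(readings)


def parallel_mean(readings, workers=4):
    """Scatter slices to workers, then gather and combine the partial results."""
    if not readings:
        raise ValueError("no readings to process")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_stats, split(readings, workers)))
    count = sum(n for n, _ in partials)
    total = sum(s for _, s in partials)
    return total / count


speeds = [48.0, 52.0, 61.0, 39.0, 70.0, 55.0]
print(parallel_mean(speeds, workers=2))
print(parallel_mean(speeds, workers=3))  # same result with more workers
```

Each worker returns a count and a sum rather than its own mean. Those partials combine correctly however the data was sliced, while averaging the per-slice means would not.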

Architectures that offer these benefits are already in use in industry. The transportation sector, for example, has adopted them to address its specific needs and challenges.

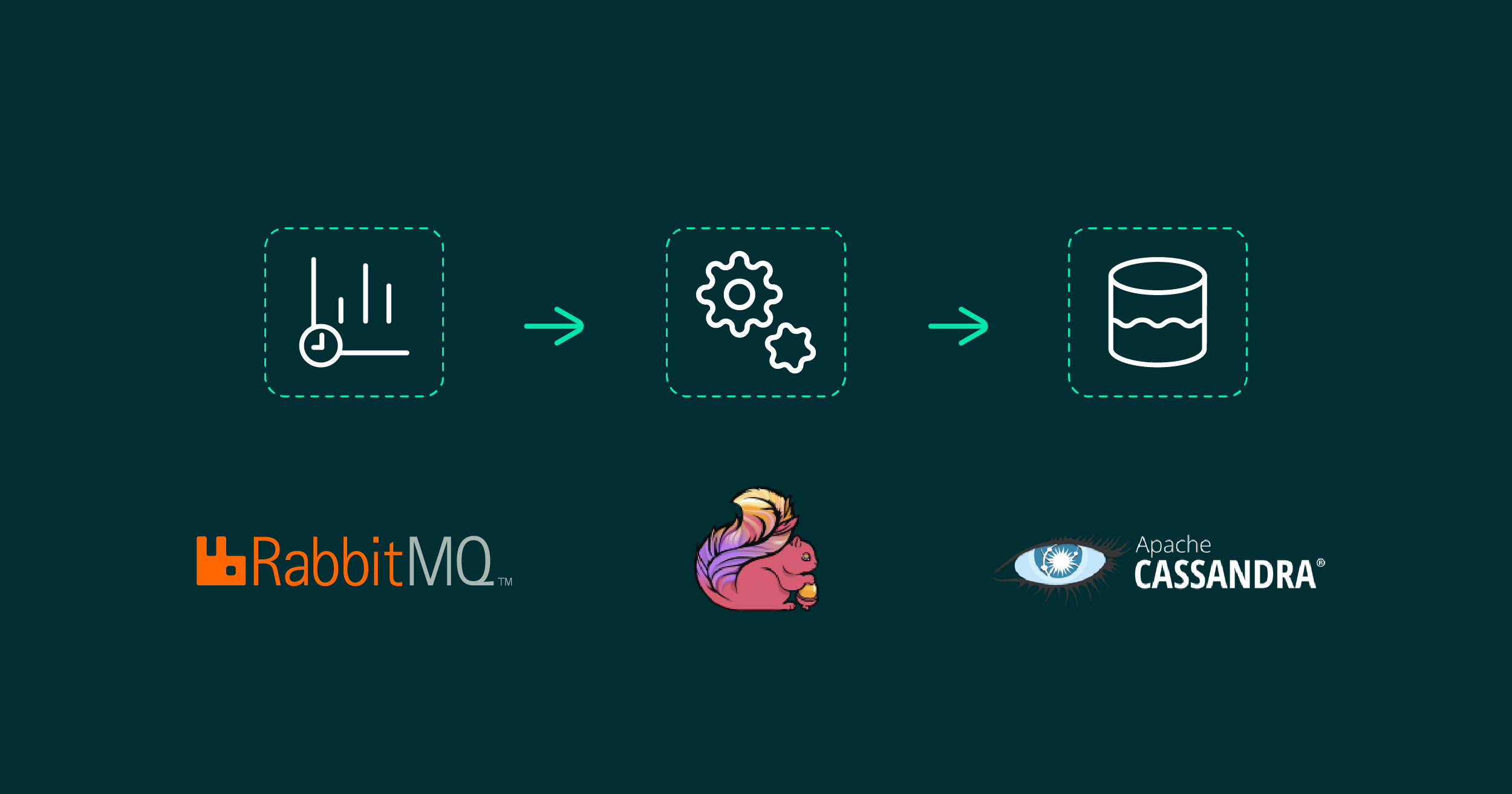

Today we’ll explore an architectural solution to this problem that can enhance data processing for businesses, and review three open-source technologies that can be integrated to achieve this improvement.

RabbitMQ

RabbitMQ is open-source messaging software that can be used as a real-time data queue. It was first released in 2007 and was later developed by Pivotal Software, which has since become part of VMware. Operating as a message broker, RabbitMQ relays messages reliably and efficiently from a source to a processing machine. It can receive data from various sources, such as IoT devices. These are typically small sensors, like accelerometers or pressure sensors, that can send large amounts of raw data.
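The broker's role can be made concrete without a running server. The sketch below uses Python's standard `queue.Queue` as an in-memory stand-in for a RabbitMQ queue. The calling thread plays the IoT device, and a worker thread plays the processing machine. This is not RabbitMQ's API, and the accelerometer conversion is purely illustrative. The point is decoupling: the producer never calls the consumer directly, it only hands messages to the broker.

```python
import queue
import threading

STOP = object()  # marker telling the consumer that no more messages will arrive


def publish(broker, readings_g):
    """Plays the IoT device: hands each raw reading to the broker, then STOP."""
    for reading in readings_g:
        broker.put(reading)
    broker.put(STOP)


def consume(broker, results):
    """Plays the processing machine: takes messages off the broker in order."""
    while True:
        message = broker.get()
        if message is STOP:
            break
        results.append(message * 9.81)  # e.g. convert acceleration from g to m/s^2


def relay(readings_g):
    broker = queue.Queue()  # in-memory stand-in for a RabbitMQ queue
    results = []
    worker = threading.Thread(target=consume, args=(broker, results))
    worker.start()
    publish(broker, readings_g)  # the producer never calls the consumer directly
    worker.join()
    return results


print(relay([1.0, 0.5, 0.2]))
```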

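RabbitMQ scales by forming clusters, which join multiple servers on a local network into a single logical broker. The nodes of a cluster share the work of serving clients. When queues are replicated across nodes (for example, with quorum queues), the cluster can keep delivering messages even if a node fails.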

It also offers the added benefit of being compatible with multiple platforms and programming languages. For instance, Python users can use the Pika client library to declare queues and to publish and consume the messages that RabbitMQ routes between systems.
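The following is a minimal publisher and consumer written with Pika 1.x's blocking API. It is a sketch under our own assumptions: the queue name `sensor-readings`, the JSON message format and the helper names are hypothetical. Running the two Pika functions requires `pip install pika` and a broker listening on `localhost`. Pika is imported inside those functions, so the encoding helpers can be used and tested on their own.

```python
import json


def encode_reading(vehicle_id, sensor, value):
    """Serialise one raw reading as a JSON message body (bytes)."""
    payload = {"vehicle_id": vehicle_id, "sensor": sensor, "value": value}
    return json.dumps(payload).encode("utf-8")


def decode_reading(body):
    """Inverse of encode_reading, used on the consumer side."""
    return json.loads(body.decode("utf-8"))


def publish_readings(readings, host="localhost", queue_name="sensor-readings"):
    """Publish (vehicle_id, sensor, value) tuples to a durable queue via Pika."""
    import pika  # imported lazily so the helpers above work without pika installed

    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    try:
        channel = connection.channel()
        channel.queue_declare(queue=queue_name, durable=True)
        for vehicle_id, sensor, value in readings:
            channel.basic_publish(
                exchange="",  # the default exchange routes by queue name
                routing_key=queue_name,
                body=encode_reading(vehicle_id, sensor, value),
                properties=pika.BasicProperties(delivery_mode=2),  # persistent message
            )
    finally:
        connection.close()


def consume_readings(handle, host="localhost", queue_name="sensor-readings"):
    """Block and pass each decoded reading to `handle`, acknowledging afterwards."""
    import pika

    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    channel = connection.channel()
    channel.queue_declare(queue=queue_name, durable=True)
    channel.basic_qos(prefetch_count=1)  # at most one unacknowledged message per consumer

    def on_message(ch, method, properties, body):
        handle(decode_reading(body))
        ch.basic_ack(delivery_tag=method.delivery_tag)  # ack only after successful handling

    channel.basic_consume(queue=queue_name, on_message_callback=on_message)
    channel.start_consuming()
```

Acknowledging only after `handle` returns means that if a consumer crashes mid-message, RabbitMQ can redeliver that message to another consumer.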

Apache Flink

Apache Flink is an open-source framework, written in Java and Scala, that provides libraries and a distributed processing engine for processing data streams. Flink is adept at handling large volumes of data, whether in batches or as a continuous stream, and can run as a cluster of processing units. Flink applications are fault-tolerant. Flink periodically checkpoints application state so that a failed job can recover, and it can guarantee exactly-once consistency for that state.
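Flink's fault tolerance rests on periodically checkpointing operator state together with the position reached in the input. After a failure, the job restores the last checkpoint and replays input from that position. The toy below imitates that idea in plain Python for a single operator. It is not Flink's API, and the class and function names are hypothetical. Because the counts and the offset are saved and restored together, every event is counted exactly once in the final state, even though some are read twice.

```python
import copy


class CheckpointedCounter:
    """Counts events per key and can snapshot its state with the input offset."""

    def __init__(self):
        self.counts = {}
        self.offset = 0  # position of the next event to read from the source
        self._snapshot = ({}, 0)

    def process(self, key):
        self.counts[key] = self.counts.get(key, 0) + 1
        self.offset += 1

    def checkpoint(self):
        # State and input position are saved together, so they stay consistent.
        self._snapshot = (copy.deepcopy(self.counts), self.offset)

    def restore(self):
        counts, offset = self._snapshot
        self.counts, self.offset = copy.deepcopy(counts), offset


def run_with_failure(source, checkpoint_every, fail_at):
    """Process `source`, simulate one crash when reaching `fail_at`, recover, finish."""
    op = CheckpointedCounter()
    failed = False
    while op.offset < len(source):
        if op.offset == fail_at and not failed:
            failed = True
            op.restore()  # in-memory state is lost: roll back to the last checkpoint
            continue      # and replay the source from the saved offset
        op.process(source[op.offset])
        if op.offset % checkpoint_every == 0:
            op.checkpoint()
    return op.counts


events = ["van-1", "van-2", "van-1", "van-3", "van-1"]
print(run_with_failure(events, checkpoint_every=2, fail_at=3))
# {'van-1': 3, 'van-2': 1, 'van-3': 1}
```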

Flink can easily be configured to read from messaging software like RabbitMQ and from other data sources, enabling it to process data from multiple sources simultaneously. What’s more, Flink supports ETL techniques that extract information from raw data and then write the results to other messaging software or store the transformed data in a database.
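The extract, transform and load steps can be illustrated with a plain-Python sketch of a typical Flink-style computation: counting harsh-braking events per driver in one-minute tumbling windows. The message fields (`driver_id`, `ts` in seconds, `accel_ms2`), the -3 m/s² threshold and the function names are hypothetical. The sketch also processes a finite list rather than an unbounded stream. In Flink's DataStream API, the transform step would correspond to a keyed, windowed aggregation.

```python
import json
from collections import defaultdict

WINDOW_SECONDS = 60
HARSH_BRAKING_MS2 = -3.0  # hypothetical threshold for a harsh-braking event


def extract(raw_messages):
    """Extract: parse raw JSON strings, skipping malformed ones."""
    for raw in raw_messages:
        try:
            yield json.loads(raw)
        except json.JSONDecodeError:
            continue


def transform(readings):
    """Transform: count harsh-braking events per driver per tumbling window."""
    counts = defaultdict(int)
    for r in readings:
        if r["accel_ms2"] <= HARSH_BRAKING_MS2:
            window_start = r["ts"] - r["ts"] % WINDOW_SECONDS
            counts[(r["driver_id"], window_start)] += 1
    return dict(counts)


def load(aggregates, sink):
    """Load: write each aggregate to a sink (a dict standing in for a database table)."""
    for (driver_id, window_start), n in aggregates.items():
        sink[(driver_id, window_start)] = n
    return sink


raw = [
    '{"driver_id": "d1", "ts": 5, "accel_ms2": -4.2}',
    '{"driver_id": "d1", "ts": 30, "accel_ms2": -1.0}',
    '{"driver_id": "d1", "ts": 65, "accel_ms2": -3.5}',
    "not json",
    '{"driver_id": "d2", "ts": 10, "accel_ms2": -5.0}',
]
print(load(transform(extract(raw)), {}))
```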

Cassandra

Apache Cassandra is a distributed, scalable, and highly available NoSQL database. It is a leading technology in its field, used to manage some of the world’s largest datasets, and its clusters can span multiple data centres. Common use cases for Cassandra include product catalogues, IoT sensor data, messaging and social networks, recommendations, personalisation, fraud detection, and other time-series applications.

A Cassandra cluster comprises a group of nodes that share the stored data, distributing it evenly across the cluster to optimise both reads and writes. Data is organised by a primary key, which uniquely identifies each row. In Cassandra, the primary key is made up of a partition key and, optionally, a clustering key (one or more clustering columns). The partition key determines which nodes in the cluster hold the data, whilst the clustering key defines the order of rows within each partition, making later retrieval more efficient.
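The roles of the two parts of the key can be sketched with a toy token ring. Several things here are our own assumptions: the table and column names are hypothetical, MD5 stands in for Cassandra's default Murmur3 partitioner, and replication and virtual nodes are left out, so each partition lives on exactly one node. The partition key (`vehicle_id`) picks the node. Within that partition, rows are kept sorted by the clustering column (`reading_time`), which is what makes time-range reads for a single vehicle cheap.

```python
import bisect
import hashlib

# CQL for an equivalent table (illustrative only, not executed here):
SCHEMA = """
CREATE TABLE sensor_readings (
    vehicle_id   text,
    reading_time timestamp,
    speed_kmh    double,
    PRIMARY KEY ((vehicle_id), reading_time)
);
"""


def token(partition_key):
    """Hash a key to a ring position (MD5 here, not Cassandra's Murmur3)."""
    return int.from_bytes(hashlib.md5(partition_key.encode()).digest()[:8], "big")


class ToyRing:
    def __init__(self, nodes):
        # Each node owns the tokens after the previous node's token, up to its own.
        self.ring = sorted((token(n), n) for n in nodes)
        self.tokens = [t for t, _ in self.ring]
        self.data = {n: {} for n in nodes}

    def node_for(self, partition_key):
        i = bisect.bisect_left(self.tokens, token(partition_key)) % len(self.ring)
        return self.ring[i][1]  # wraps around to the first node past the end

    def insert(self, vehicle_id, reading_time, speed_kmh):
        partition = self.data[self.node_for(vehicle_id)].setdefault(vehicle_id, [])
        bisect.insort(partition, (reading_time, speed_kmh))  # sorted by clustering key

    def read_partition(self, vehicle_id):
        return self.data[self.node_for(vehicle_id)].get(vehicle_id, [])


ring = ToyRing(["node-a", "node-b", "node-c"])
ring.insert("van-1", 20, 50.0)
ring.insert("van-1", 10, 40.0)
print(ring.node_for("van-1"), ring.read_partition("van-1"))  # rows ordered by reading_time
```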

Real Use Case

An example of an implementation that uses the Kappa architecture to process data in real time from IoT devices can be seen in this thesis. In it, a data stream emitted by thousands of IoT sensors installed in a fleet of delivery vehicles is processed in real time. Before adopting a distributed architecture, the company had to wait a whole day before it could process all the information from these sensors and obtain statistics on its drivers’ performance. After the implementation, it can obtain these statistics immediately thanks to the real-time stream processing engine.
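Moving from an end-of-day batch to immediate statistics largely comes down to maintaining aggregates incrementally. Here is a minimal sketch, assuming per-driver average and maximum speed as the indicators; these indicators and the names are hypothetical. Each event updates a running mean in constant time, and the result matches what a batch recomputation over the same events would give.

```python
from dataclasses import dataclass


@dataclass
class DriverStats:
    """Running statistics updated per event, so they are always current."""
    count: int = 0
    mean_speed: float = 0.0
    max_speed: float = float("-inf")

    def update(self, speed):
        self.count += 1
        self.mean_speed += (speed - self.mean_speed) / self.count  # incremental mean
        self.max_speed = max(self.max_speed, speed)


def stream_stats(events):
    """Update per-driver stats as each (driver_id, speed) event arrives."""
    stats = {}
    for driver_id, speed in events:
        stats.setdefault(driver_id, DriverStats()).update(speed)
    return stats


def batch_mean(events, driver_id):
    """End-of-day batch equivalent: recompute from all stored events at once."""
    speeds = [s for d, s in events if d == driver_id]
    return sum(speeds) / len(speeds)


day = [("d1", 50.0), ("d1", 70.0), ("d2", 30.0), ("d1", 60.0)]
live = stream_stats(day)
print(live["d1"].mean_speed, batch_mean(day, "d1"))
```

The streaming version never stores the raw history. That is why it can answer at any moment, rather than only after the whole day's data has arrived.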

Scalability is another problem that a distributed architecture can solve, and this was also the case in this implementation. The original processing system could not have coped with a significant increase in sensor data, whereas the company can now handle such growth simply by adding processing power when necessary.

Conclusion

As we’ve seen, the Kappa architecture and the technologies presented in this blog post can enhance companies’ data extraction processes. They can deliver more insights from data, more quickly and efficiently, but they also bring a higher degree of complexity. The following table presents the advantages and disadvantages of a scalable and distributed architecture:

| Type | Aspect | Description |
| --- | --- | --- |
| Advantage | Speed and Performance | Parallel computing can significantly improve speed and performance. |
| Advantage | Scalability | Distributed computing allows you to scale by adding more machines or nodes as needed. |
| Advantage | Fault Tolerance | Even if one machine fails, the system can continue to operate. |
| Advantage | Resource Utilisation | Parallel computing makes efficient use of multi-core processors. |
| Advantage | Cost-effectiveness | Distributing workloads across multiple low-cost machines can be more cost-effective than investing in a single high-end machine. |
| Disadvantage | Code Complexity | Parallel code is significantly more complex to write and debug. |
| Disadvantage | Communication Overhead | Communication between nodes can introduce latency and overhead. |
| Disadvantage | Architecture Complexity | Challenges such as load balancing, data consistency, and fault tolerance must be handled explicitly. |

A distributed and scalable Kappa architecture offers the right tools to deal with modern Big Data processing. What’s more, a scalable system lets you provision resources as needed, reducing infrastructure costs during periods of lower demand. Such a flexible, distributed, and scalable architecture enables companies to process huge amounts of data within tight timeframes and to deliver insightful performance indicators derived directly from raw data.

Migrating to an architecture like this is a significant data transformation: an intricate process that requires careful thought and meticulous preparation. For further information and guidance, take a look at our previous blog post dedicated to this topic. Should you have any questions, don’t wait a minute longer. Just contact us and we’ll be happy to help!