23 Feb 2017 DATA QUALITY WITH EDQ – PART 2: DATA STANDARDIZATION

In the first post of the Data Quality Series we introduced the main concepts of data quality. In the second article we explained how Oracle Enterprise Data Quality (EDQ) can help us profile our data. The focus in this post is to review some of EDQ’s basic cleansing and standardization capabilities.

Introduction

EDQ provides a number of built-in transformations for data cleansing, and some of the most commonly used transformations are:

- Character Replace: replaces characters according to a map
- Denoise: removes noise characters from text attributes
- Lower Case: converts string values to lower case
- Upper Case: converts string values to upper case
- Proper Case: converts string values to proper case
- Normalize No Data: normalizes attributes with no data to nulls
- Normalize Whitespace: removes leading and trailing whitespace, and normalizes inter-word whitespace to a single space
- RegEx Replace: replaces a string value matching a regular expression with a given value, or part of the matching expression
- Replace: replaces values using a map
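Several of these transformations are simple string operations. As a rough illustration only, the following plain-Python sketch approximates what Character Replace, Denoise, Normalize No Data and RegEx Replace do. It is not EDQ code, and the noise characters and no-data tokens below are hypothetical stand-ins for the reference data EDQ would be configured with.

```python
import re
from typing import Dict, Optional

# Hypothetical settings: in EDQ these would come from reference data,
# so the exact characters and tokens here are illustrative assumptions.
NOISE_CHARS = set("!?*#~")
NO_DATA_VALUES = {"", "n/a", "na", "null", "none", "-"}


def character_replace(value: str, char_map: Dict[str, str]) -> str:
    """Replace single characters according to a map (cf. Character Replace)."""
    return "".join(char_map.get(ch, ch) for ch in value)


def denoise(value: str) -> str:
    """Remove characters treated as noise (cf. Denoise)."""
    return "".join(ch for ch in value if ch not in NOISE_CHARS)


def normalize_no_data(value: Optional[str]) -> Optional[str]:
    """Turn values that carry no real data into None (cf. Normalize No Data)."""
    if value is None or value.strip().lower() in NO_DATA_VALUES:
        return None
    return value


def regex_replace(value: str, pattern: str, replacement: str) -> str:
    """Replace every match of a regular expression (cf. RegEx Replace)."""
    return re.sub(pattern, replacement, value)


print(character_replace("Café", {"é": "e"}))      # Cafe
print(denoise("Hello!!"))                         # Hello
print(normalize_no_data(" N/A "))                 # None
print(regex_replace("Tel: 555-1234", r"\D", ""))  # 5551234
```

Lower Case and Upper Case correspond roughly to Python's `str.lower()` and `str.upper()`.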

EDQ also comes with a series of built-in processors for data type conversion that make normalizing the types in our data straightforward. There are also transformations that can be used to enhance our data, for example by adding dates, concatenating or parsing fields, or adding initials from text values. Maps are especially useful for data standardization: if we keep every known value representing an entity in a map table, together with the standard value for that entity, EDQ can standardize the data in just a couple of simple steps. In the following example, we will see how to improve the quality of two common fields in people data: Country and Gender.

1. Data Standardization with EDQ

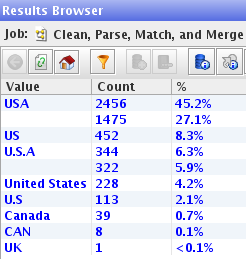

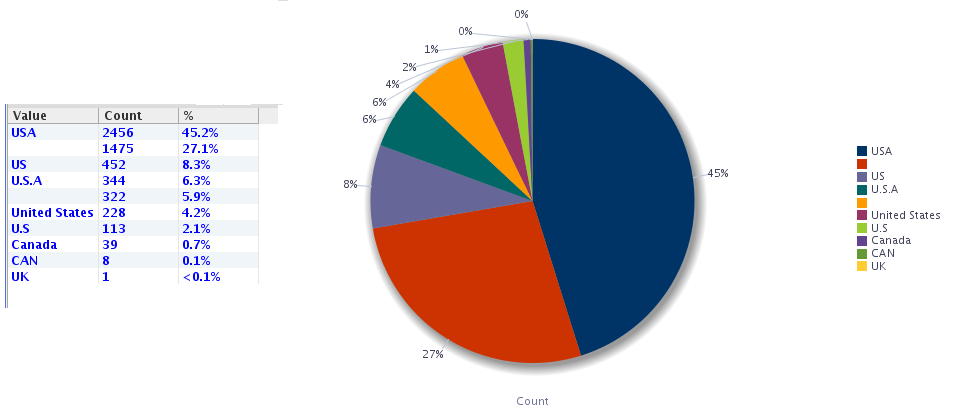

Let’s suppose that we have a dataset with a Country field. The table should contain data mostly for the United States; however, a quick Frequency profile shows the following results:

Figure 1: Frequency profile of the Country field. We can see that some countries are represented by more than one value in the database.

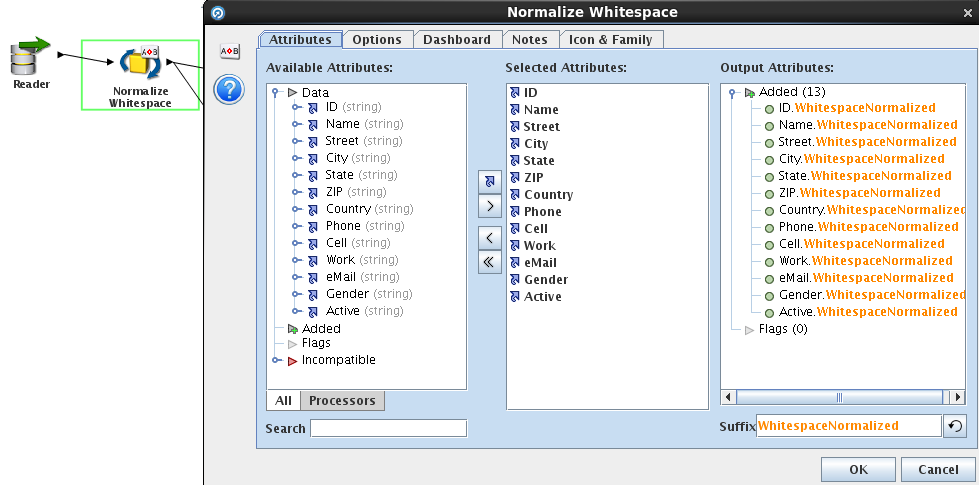
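A Frequency profile boils down to counting how often each distinct value occurs. The sketch below shows that idea in plain Python on a small hypothetical sample (not the dataset from Figure 1). It keeps nulls as their own bucket so that missing values stand out, as they do in the profile above.

```python
from collections import Counter
from typing import Iterable, List, Optional, Tuple


def frequency_profile(
    values: Iterable[Optional[str]],
) -> List[Tuple[Optional[str], int, float]]:
    """Return (value, count, percentage) tuples, most frequent value first.

    None is kept as a separate value so that missing data is visible.
    """
    counts = Counter(values)
    total = sum(counts.values())
    return [(value, n, round(100.0 * n / total, 1)) for value, n in counts.most_common()]


# Hypothetical sample, loosely modelled on the variants discussed in the text.
sample = ["USA", "US", "USA", "United States", "Canada", "CAN", None, "USA"]
for value, count, pct in frequency_profile(sample):
    print(f"{value!s:15} {count:3} {pct:5.1f}%")
```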

The quality of this field is clearly poor: the values ‘USA’, ‘US’, ‘U.S.A’, ‘United States’ and ‘U.S’ all refer to the single entity that we would like to call ‘United States of America’. Moreover, Canada appears under two different values (‘Canada’ and ‘CAN’), and some records have null values. With EDQ, we can fix issues like these using mapping tables.

The first transformation that we need is the Normalize Whitespace processor, which removes leading and trailing whitespace and normalizes inter-word spaces to a single space. Simply create a new process, add the transformation, connect the Reader output to the normalizer input, and select the fields that need whitespace normalization:

Figure 2: Normalize Whitespace processor in EDQ. Before processing text fields for standardization, it is recommended to remove leading and trailing spaces and to normalize inter-word spaces to a single space.

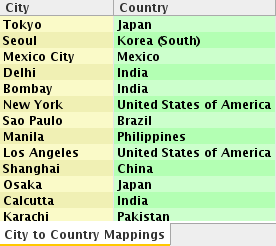
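Conceptually, Normalize Whitespace does something like the following minimal Python sketch. Splitting on any run of whitespace and re-joining with a single space both trims the ends and collapses inner gaps. The `normalize_record` helper and the field names are hypothetical, for illustration only.

```python
from typing import Dict, Iterable, Optional


def normalize_whitespace(value: Optional[str]) -> Optional[str]:
    """Trim leading/trailing whitespace and collapse inner runs to one space."""
    if value is None:
        return None
    # str.split() with no argument splits on any run of whitespace (spaces, tabs, newlines)
    return " ".join(value.split())


def normalize_record(record: Dict[str, object], fields: Iterable[str]) -> Dict[str, object]:
    """Apply whitespace normalization only to the selected string fields of a record."""
    selected = set(fields)
    return {
        key: normalize_whitespace(val) if key in selected and isinstance(val, str) else val
        for key, val in record.items()
    }


row = {"City": "  New   York ", "Country": "U.S.A\t", "Id": 42}
print(normalize_record(row, ["City", "Country"]))
# {'City': 'New York', 'Country': 'U.S.A', 'Id': 42}
```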

To fill in the null values, we will derive the country from another field, City, using an external mapping called City to Country Mappings. This table maps cities to their corresponding countries:

Figure 3: City to Country Mappings

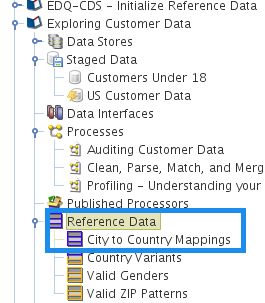

To use it in our project, we just need to add it to the Reference Data section:

Figure 4: Adding Reference Data

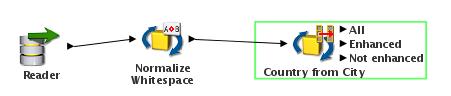

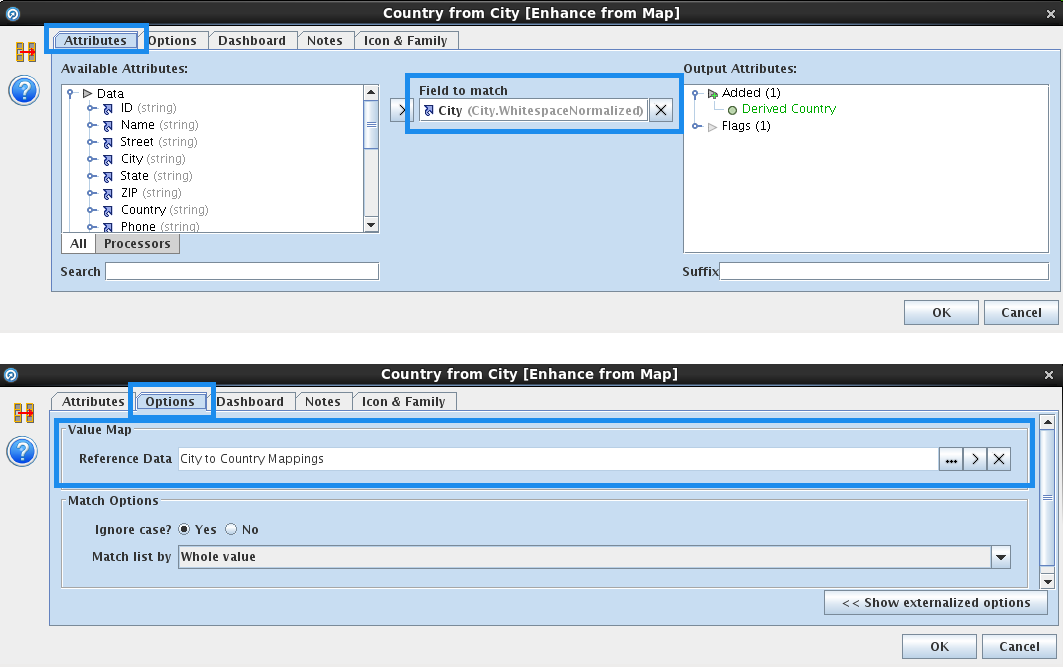

We will use the mapping to generate the name of the country in a new column called “Derived Country” for each city in our dataset. To do this, we add an Enhance from Map processor, connect it to the output of the Normalize Whitespace processor, and configure it to match on the City field using the mapping we just added:

Figure 5: Process for normalizing whitespace and populating the Country field in EDQ

Figure 6: Enhance from Map processor in EDQ. We will populate the “Country” field using the “City to Country” mapping, matching by the field “City”.

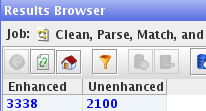
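The Enhance from Map step is essentially a dictionary lookup. The sketch below assumes a tiny hypothetical excerpt of the City to Country Mappings table and counts how many records were enhanced and how many were not, mirroring the counts EDQ reports; it is an illustration, not how EDQ implements the processor.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical excerpt of the City to Country Mappings reference data.
CITY_TO_COUNTRY: Dict[str, str] = {
    "Chicago": "United States of America",
    "Houston": "United States of America",
    "Toronto": "Canada",
}


def enhance_from_map(
    records: List[Dict[str, Optional[str]]],
    mapping: Dict[str, str],
    match_field: str,
    output_field: str,
) -> Tuple[List[Dict[str, Optional[str]]], int, int]:
    """Add output_field by looking up match_field in mapping.

    Returns the new records plus the enhanced and unenhanced counts.
    """
    enhanced = 0
    result = []
    for record in records:
        key = record.get(match_field)
        derived = mapping.get(key) if key is not None else None
        new_record = dict(record)  # leave the input record untouched
        new_record[output_field] = derived
        if derived is not None:
            enhanced += 1
        result.append(new_record)
    return result, enhanced, len(records) - enhanced


rows = [
    {"City": "Toronto", "Country": None},
    {"City": "Chicago", "Country": "US"},
    {"City": "Springfield", "Country": "USA"},
]
enhanced_rows, n_enhanced, n_unenhanced = enhance_from_map(
    rows, CITY_TO_COUNTRY, "City", "Derived Country"
)
print(n_enhanced, n_unenhanced)  # 2 1
```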

After running this process, the results of the Enhance from Map processor show that we were able to enhance 3338 records:

Figure 7: Results of the country enhancement using the City to Country Mapping in EDQ

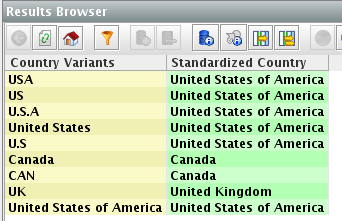

However, 2100 records still have no derived country, so there is more work to do before this field is fit for use. The next step is to normalize the country names using another mapping called Country Variants. This mapping contains the most common variants for ‘United States of America’, plus variants for Canada:

Figure 8: Country Variants mapping. The Standardized Country column contains the standard that we want to use for each real world entity, in this case countries.

To normalize these different variants, we add a Replace processor to the process, calling it Standardize Country:

Figure 9: Country Standardization process in EDQ.

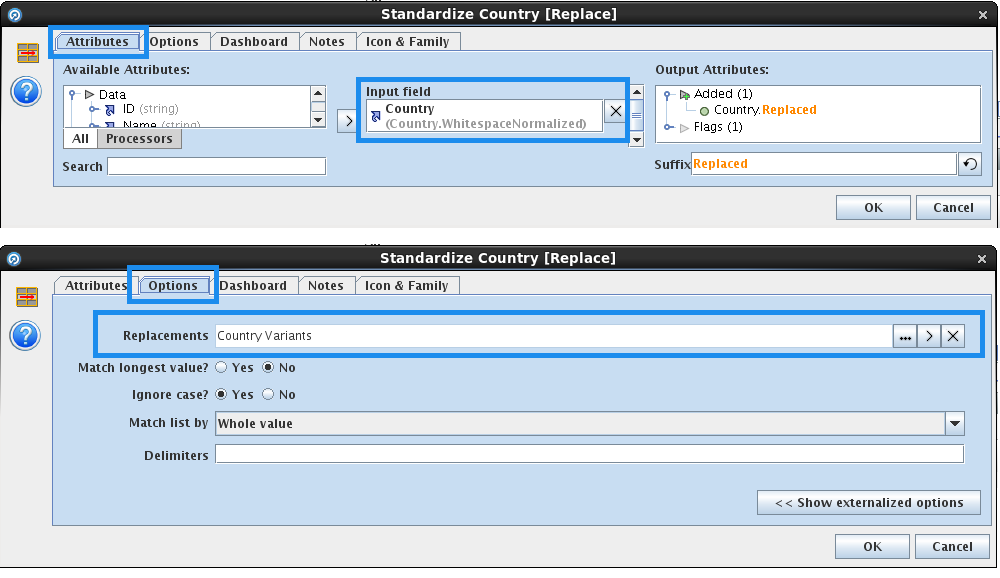

In the properties of the transformation, we define Country as the input field (this is the field we are standardizing) and specify the replacements in the Options tab, using the Country Variants mapping described above.

Figure 10: Replace processor in EDQ. The processor will replace the original value of the Country field with the standard from the Country Variants mapping.

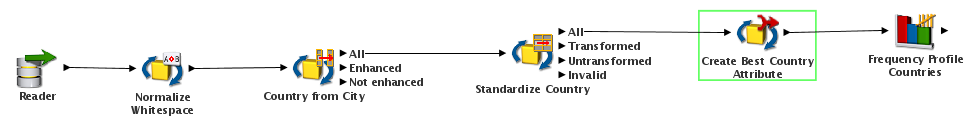
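In plain Python, the Replace step amounts to mapping each value to its standard form. The variant list below is assumed from the values seen in the Country profile rather than copied from the actual Country Variants table, and the sketch assumes exact, case-sensitive matching with unmapped values passed through unchanged.

```python
from typing import Dict, Optional

# Assumed contents, based on the variants discussed for the Country field.
COUNTRY_VARIANTS: Dict[str, str] = {
    "USA": "United States of America",
    "US": "United States of America",
    "U.S.A": "United States of America",
    "U.S": "United States of America",
    "United States": "United States of America",
    "CAN": "Canada",
    "Canada": "Canada",
}


def standardize(value: Optional[str], variants: Dict[str, str]) -> Optional[str]:
    """Return the standard form of value; unknown values and None pass through."""
    if value is None:
        return None
    return variants.get(value, value)


for raw in ["U.S", "CAN", "Mexico", None]:
    print(repr(raw), "->", repr(standardize(raw, COUNTRY_VARIANTS)))
```

Because the lookup is an exact match, running whitespace normalization first matters: a value such as ' USA' with a leading space would not match the 'USA' key.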

Finally, we simply add a Merge Attributes processor to merge the country derived from the city and the standardized country into a new column, ‘Best Country’, and a Frequency Profile processor to check the results:

Figure 11: Complete Country Standardization process in EDQ. The Merge Attributes processor, called “Create Best Country Attribute”, merges the values of the country derived from the city and the country obtained after standardization using the Country Variants mapping.

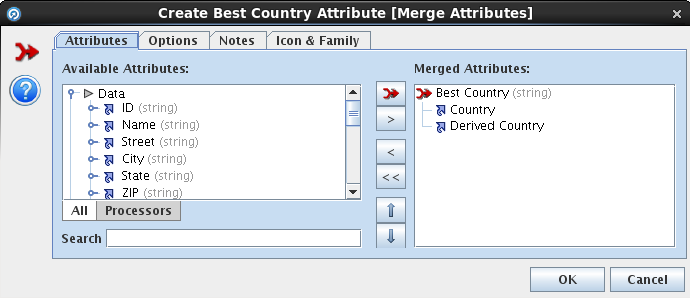

The transformation will merge the derived country with the standardized country into a new column called “Best Country”:

Figure 12: Merging attributes in EDQ.

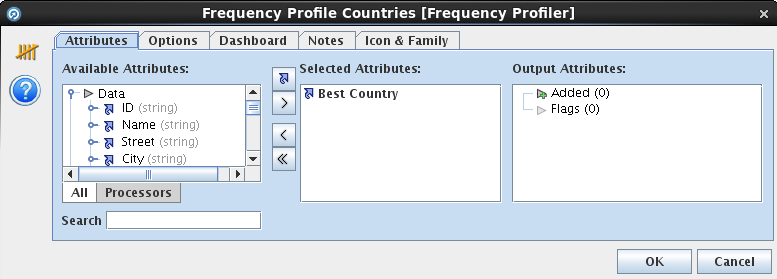
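One way to express the Best Country merge in code is a first-non-empty-value rule. This is a sketch under assumptions: the field names are hypothetical, and giving the standardized country priority over the city-derived one is a choice made for illustration, since the order used in Figure 12 is not stated in the text.

```python
from typing import Dict, Optional


def merge_attributes(*values: Optional[str]) -> Optional[str]:
    """Return the first value that is neither None nor blank, else None."""
    for value in values:
        if value is not None and value.strip():
            return value
    return None


def best_country(record: Dict[str, Optional[str]]) -> Optional[str]:
    # Hypothetical field names; the priority order is an assumption for illustration.
    return merge_attributes(record.get("Standardized Country"), record.get("Derived Country"))


print(best_country({"Standardized Country": None, "Derived Country": "Canada"}))  # Canada
print(best_country({"Standardized Country": "United States of America", "Derived Country": None}))
```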

With a Frequency profiler we can analyse the results of the new field:

|

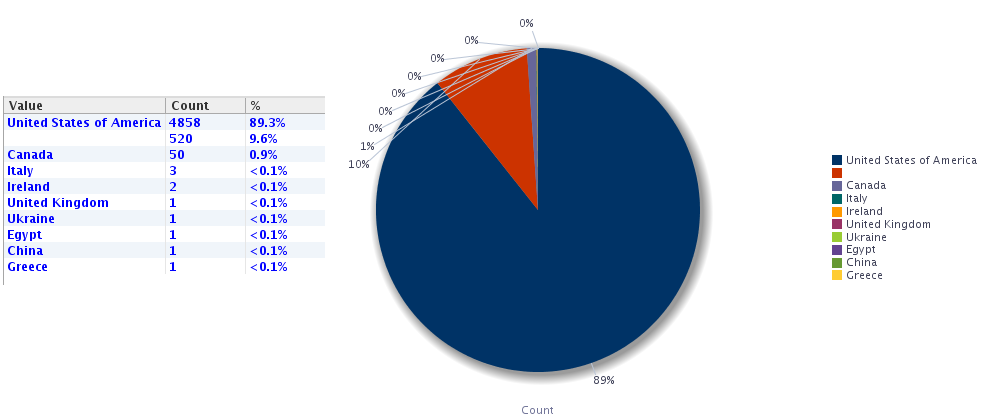

Figure 13: Frequency profiler to verify the results of the standardization of the Country field in EDQ. |

We can see how the quality of the field has improved thanks to the transformations applied:

Figure 14: Country Frequency profiler before standardization in EDQ, showing null values and many values for the same countries.

Figure 15: Country Frequency profiler after standardization in EDQ.

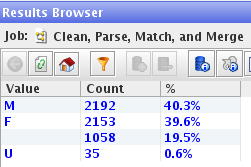

We were able to eliminate all the variants of United States of America, and we also identified other countries that were not appearing correctly before the standardization process.

Now let’s suppose we have a dataset of people that includes a Gender field, which should contain either ‘M’ or ‘F’ (Male or Female) for each record. However, a quick profile of the field shows the following results:

Figure 16: Gender frequency profiling in EDQ.

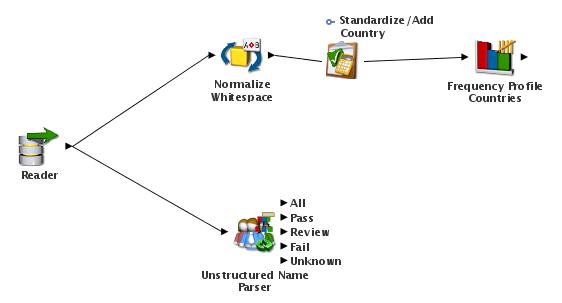

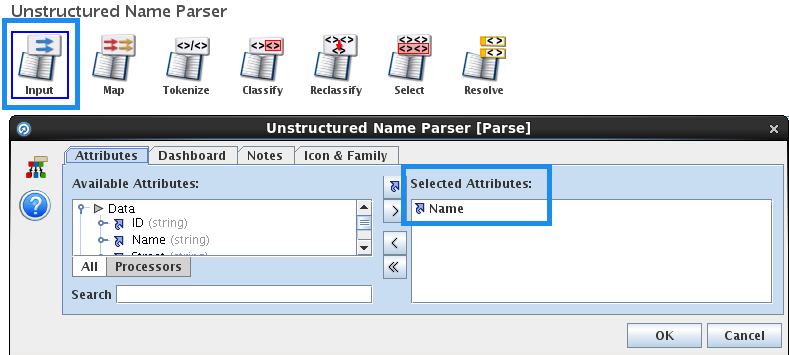

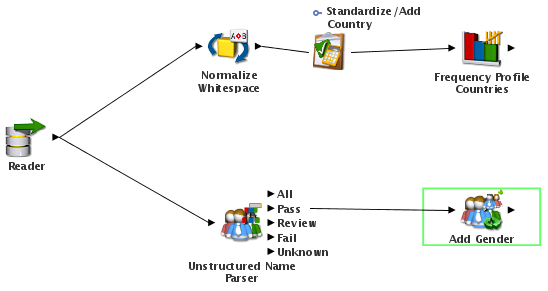

We can see that there are many null values, and some records with ‘U’ in the gender field. We will improve the quality of this field with EDQ by deriving the gender from the title and first name fields, and then merging the derived gender with the existing Gender field to get the best result. We begin by adding an Unstructured Name Parser to parse the name field, so the name field is the input of the transformation, specified in the Input sub-processor of the parser:

Figure 17: Parsing text fields with EDQ.

Figure 18: Input sub-processor of the Unstructured Name Parser processor in EDQ. The input is specified here.

The name is mapped to the processor’s only attribute, “FullName”:

Figure 19: Map sub-processor of the Unstructured Name Parser processor in EDQ. The FullName attribute is mapped to the input field Name.

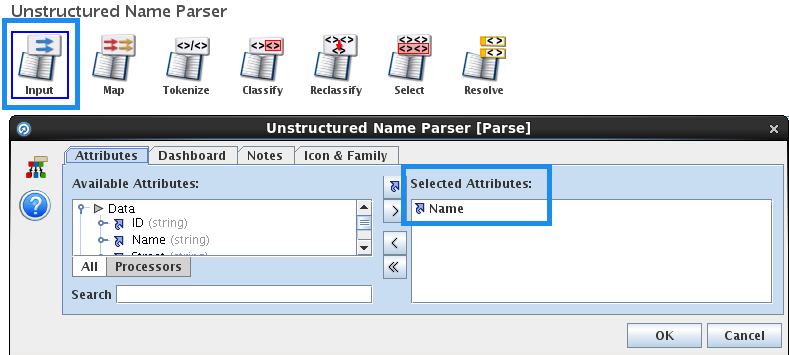

After running the process, the parser results show all the steps taken and the rules created to parse the name field into three new fields for each record: P1_Prefix with the title, P1_First with the first name, and P1_Last with the last name:

Figure 20: Results of the Unstructured Name Parser, with the fuzzy rules created to parse the string containing the name into three separate fields for the title, first name, and last name.

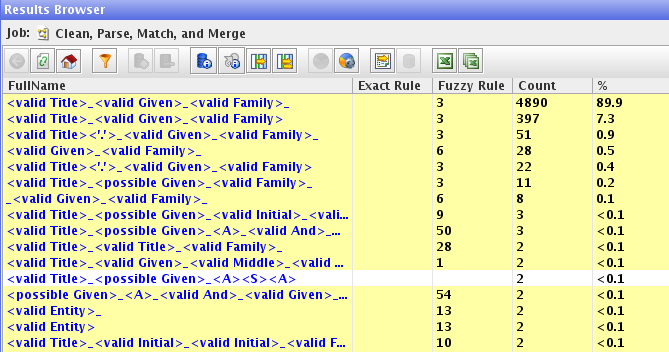
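The Unstructured Name Parser is far more capable than a few lines of code, so the following is only a naive stand-in to show the shape of its output. It assumes a name consists of an optional title, a first name and a last name, uses a small hypothetical title list, and fills the same three fields as the parser in the example.

```python
from typing import Dict, Optional

# Hypothetical list of recognised titles (lower case, without full stops).
TITLES = {"mr", "mrs", "ms", "miss", "dr", "prof"}


def parse_name(full_name: str) -> Dict[str, Optional[str]]:
    """Naively split 'Title First ... Last' into prefix, first and last name."""
    tokens = full_name.replace(".", " ").split()
    prefix = None
    if tokens and tokens[0].lower() in TITLES:
        prefix = tokens.pop(0)
    # Middle names are ignored; a single remaining token is treated as the last name.
    first = tokens[0] if len(tokens) > 1 else None
    last = tokens[-1] if tokens else None
    return {"P1_Prefix": prefix, "P1_First": first, "P1_Last": last}


print(parse_name("Mr. John Smith"))
# {'P1_Prefix': 'Mr', 'P1_First': 'John', 'P1_Last': 'Smith'}
```

Rules this simple break quickly on real data (initials, compound surnames, names written surname first), which is why a configurable, rule-based parser is used instead.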

Now we can use these fields to derive the gender of each person in the dataset. To do this, we add the built-in transformation Add Gender to the process, and join it to the pass output of the parser transformation:

Figure 21: Enhancing a Gender field in EDQ. The process contains a parser transformation and EDQ’s built-in transformation for adding the gender.

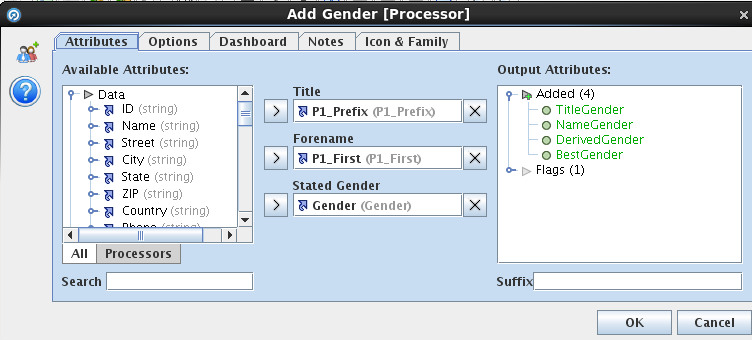

To derive the gender, we need to specify the fields that contain the title and the first name in the processor configuration, as well as the stated gender, which the processor will use in combination with the derived gender to create the output “Best Gender”.

Figure 22: Enhancing a Gender field in EDQ. The Add Gender processor requires three attributes: title, first name, and stated gender.

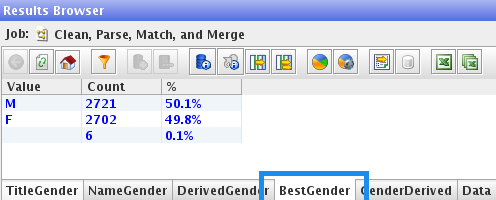
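The idea of combining a derived gender with the stated one can be sketched as follows. The title and first-name lists are hypothetical placeholders for EDQ's own reference data, and the precedence rules (title before first name, and a valid stated ‘M’ or ‘F’ always kept) are assumptions made for this illustration, not documented EDQ behaviour.

```python
from typing import Optional

# Hypothetical reference data standing in for EDQ's own lists.
TITLE_GENDER = {"mr": "M", "mrs": "F", "ms": "F", "miss": "F"}
FIRST_NAME_GENDER = {"john": "M", "james": "M", "mary": "F", "jane": "F"}


def derive_gender(title: Optional[str], first_name: Optional[str]) -> Optional[str]:
    """Derive 'M'/'F' from the title first, then the first name; None if unknown."""
    if title and title.lower() in TITLE_GENDER:
        return TITLE_GENDER[title.lower()]
    if first_name and first_name.lower() in FIRST_NAME_GENDER:
        return FIRST_NAME_GENDER[first_name.lower()]
    return None


def best_gender(
    title: Optional[str], first_name: Optional[str], stated: Optional[str]
) -> Optional[str]:
    """Keep a valid stated gender; otherwise fall back to the derived one."""
    if stated in ("M", "F"):
        return stated
    return derive_gender(title, first_name)


print(best_gender("Mrs", "Alex", None))  # F
print(best_gender(None, "John", "U"))    # M
print(best_gender("Dr", "Sam", None))    # None
```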

If we run the process now, we can see how we have improved the quality of this field in the results of the Add Gender processor:

Figure 23: Results of the Gender standardization in EDQ.

Now there are only 6 records with missing gender, and we have reduced the percentage of missing gender records from 19.5% to 0.1%.

Conclusion

EDQ provides some solid features that make data cleansing and standardization tasks much easier: we can apply built-in transformations, use external maps, and apply business rules to standardize, correct wrong values, normalize fields, and enhance our data. You can try these and other EDQ standardization examples by downloading the latest EDQ virtual machine and hands-on lab from the following links:

- Latest EDQ virtual machine from Oracle
- Getting Started Hands-On Lab

The next and final article in the series reviews the basic data deduplication capabilities of EDQ. Click here if you would like to know more about the Data Quality Services we offer!