23 Sep 2013 Twitter and Oracle Endeca Information Discovery

PART 1: Twitter Extraction, Integrator Transformation and Endeca Loading

In recent years we have seen how the explosion of social media, beyond giving people an easy way to create and publish personal content, also offers a real-time source of information that no company aiming for customer satisfaction (and which one isn’t?) can wisely ignore. That’s why today we want to talk about Twitter and Oracle Endeca Information Discovery.

Twitter is currently the most representative example of a social network providing real-time data from users all over the world, including their reactions, opinions, criticism and praise for any social event, such as a new product release or a football match.

The main drawback of these kinds of data sources is that they are heterogeneous and unstructured, so some processing is needed to extract useful information from them. That’s where Oracle Endeca Information Discovery (OEID) comes into play.

This blog article explains how to capture fresh real-time Twitter data in a football match scenario and feed it to Endeca in order to perform unstructured text enrichment and analytics.

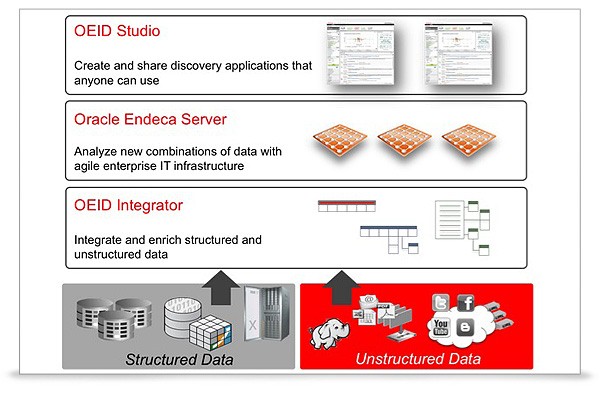

Oracle Endeca Framework

Three main components can be identified in the OEID platform: the Oracle Endeca Server, the OEID Studio and the OEID Integrator.

- Oracle Endeca Server. Stores the data in a key-value pair data model, which allows flexible management of changing and diverse data types and also reduces the need for up-front data modelling.

- OEID Integrator. Gathers and unifies diverse sources of data and also enriches unstructured text through entity and theme detection as well as sentiment analysis.

- OEID Studio. Allows the final business user to visualise and discover new insights by analysing the stored data through the intuitive building of interactive dashboards.

In this blog’s use case, the OEID Integrator will be used to parse and classify the raw Twitter data, and to perform some text enrichment and sentiment analysis on the content of the captured tweets before loading them into the Endeca Server. Afterwards, the OEID Studio will be used to perform the analytics on the extracted information.

Twitter stream

The public streaming APIs provide low-latency, real-time access to Twitter’s global stream of data. With a “filtering” API call, we can open a connection with Twitter through which we receive all the tweets that match our query.

For our football match example, we wrote some Java code to capture all the tweets related to the match played on 2 August between F.C. Barcelona and Santos for the 48th edition of the Joan Gamper Trophy, focusing on the two most popular players (Messi and Neymar) with the following query:

https://stream.twitter.com/1.1/statuses/filter.json?track=messi,neymar,gamper
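As a rough illustration of how such a filter URL can be assembled (a minimal sketch rather than the capture code itself), the snippet below joins the keywords with commas, which the track parameter treats as a logical OR. The class and method names are made up for this example, and the request would still have to be signed with OAuth credentials, which the sketch leaves out.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, named for illustration only.
class TrackQuery {
    // Endpoint taken from the query shown above.
    static final String FILTER_ENDPOINT =
            "https://stream.twitter.com/1.1/statuses/filter.json";

    // Builds the filter URL; commas between keywords act as a logical OR.
    static String buildFilterUrl(List<String> keywords) {
        List<String> encoded = new ArrayList<>();
        for (String keyword : keywords) {
            // Form-encode each keyword (a space becomes '+') so it is safe in a URL.
            encoded.add(URLEncoder.encode(keyword.trim(), StandardCharsets.UTF_8));
        }
        return FILTER_ENDPOINT + "?track=" + String.join(",", encoded);
    }

    public static void main(String[] args) {
        System.out.println(buildFilterUrl(List.of("messi", "neymar", "gamper")));
    }
}
```

Keeping the keywords in a list makes it easy to add or drop terms for a different match without editing the URL by hand.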

The analysis in this article covers the period between 19:45 and 0:00 CET (the match started at 21:30). In total, about 180,000 multilingual tweets (mainly English, Spanish and Portuguese) were captured.
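Restricting tweets to that time window could look roughly like the sketch below. It assumes that each tweet carries the `created_at` timestamp in the Twitter API v1.1 text format and that the times quoted above are local Barcelona time (modelled here with the `Europe/Madrid` zone). Both are assumptions of this example, as are the class and method names; swap the zone constant if a fixed UTC+1 offset is what is wanted.

```java
import java.time.LocalDateTime;
import java.time.OffsetDateTime;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

// Hypothetical helper, named for illustration only.
class AnalysisWindow {
    // created_at format in API v1.1 payloads, e.g. "Fri Aug 02 19:30:00 +0000 2013".
    static final DateTimeFormatter CREATED_AT =
            DateTimeFormatter.ofPattern("EEE MMM dd HH:mm:ss Z yyyy", Locale.ENGLISH);

    // Assumption: the window is expressed in local Barcelona time.
    static final ZoneId LOCAL_ZONE = ZoneId.of("Europe/Madrid");
    static final LocalDateTime START = LocalDateTime.of(2013, 8, 2, 19, 45);
    static final LocalDateTime END = LocalDateTime.of(2013, 8, 3, 0, 0);

    // True when the tweet falls in [START, END) once converted to local time.
    static boolean inWindow(String createdAt) {
        LocalDateTime local = OffsetDateTime.parse(createdAt, CREATED_AT)
                .atZoneSameInstant(LOCAL_ZONE)
                .toLocalDateTime();
        return !local.isBefore(START) && local.isBefore(END);
    }

    public static void main(String[] args) {
        System.out.println(inWindow("Fri Aug 02 19:30:00 +0000 2013"));
    }
}
```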

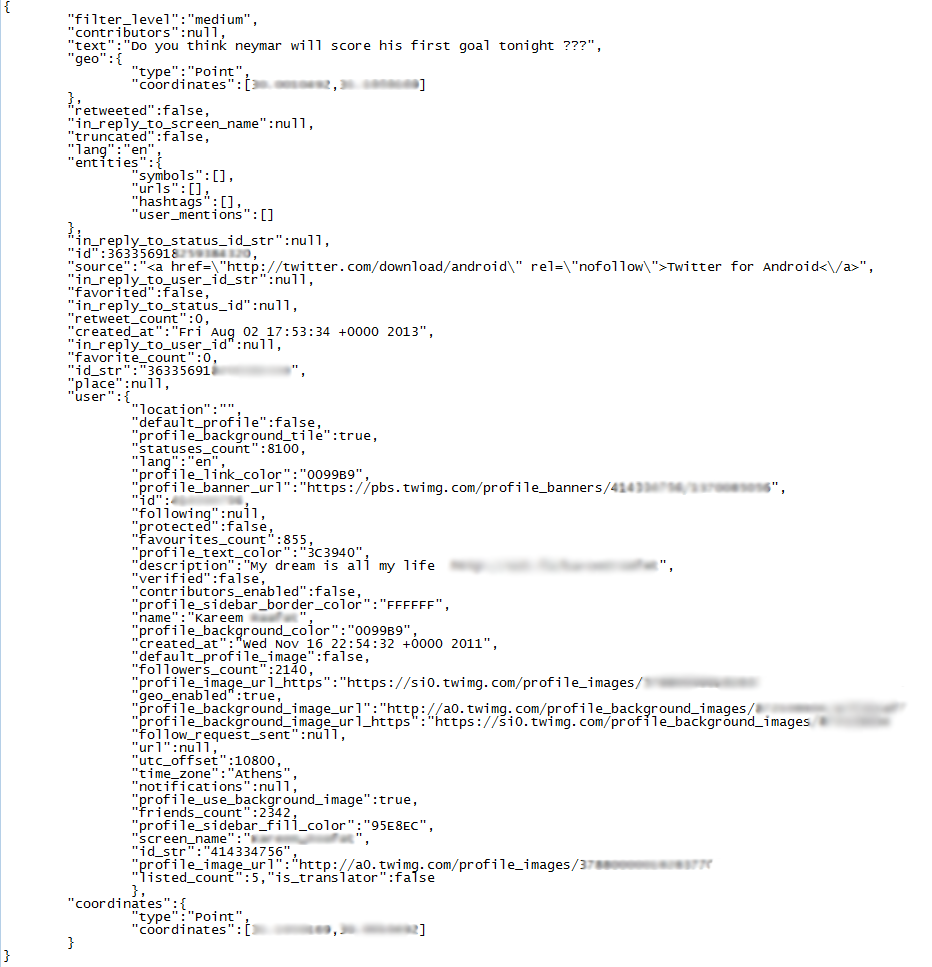

Tweets are received in JSON format, so some parsing was done “on the fly” while receiving them from the stream in order to extract the most important characteristics of the tweets and their authors: tweet ID, date, text, coordinates, user ID, user name, user language, user location, and other useful information.
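To give an idea of what processing “on the fly” means, here is a minimal sketch of a read loop. It assumes the stream delivers one JSON message per line, with blank lines sent only to keep the connection alive, and it hands each message to a handler as soon as it arrives. The class name and the handler are illustrative. Extracting the individual fields is left to whatever JSON library is at hand, since the Java standard library does not include one.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.function.Consumer;

// Hypothetical helper, named for illustration only.
class StreamLines {
    // Passes every non-blank line to the handler and returns how many were dispatched.
    static int consume(Reader stream, Consumer<String> handler) {
        int dispatched = 0;
        try (BufferedReader reader = new BufferedReader(stream)) {
            String line;
            while ((line = reader.readLine()) != null) {
                if (line.trim().isEmpty()) {
                    continue; // assumed keep-alive line, nothing to parse
                }
                handler.accept(line); // one JSON message, ready for a JSON parser
                dispatched++;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return dispatched;
    }

    public static void main(String[] args) {
        // A StringReader stands in for the network stream in this example.
        String sample = "{\"id_str\":\"1\"}\r\n\r\n{\"id_str\":\"2\"}\r\n";
        int count = consume(new StringReader(sample), System.out::println);
        System.out.println(count + " messages");
    }
}
```

Handling one line at a time keeps memory use flat no matter how many tweets arrive during the match.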

Loading tweets into Endeca

After building the data file containing the tweets captured from the stream and adding the influence information, it’s time to apply the necessary transformations to the data and load it into Endeca. That process, performed with the OEID Integrator, comprises the following steps (we recommend using the sample graphs from the “GettingStarted” demo project for common tasks such as domain creation or configuration):

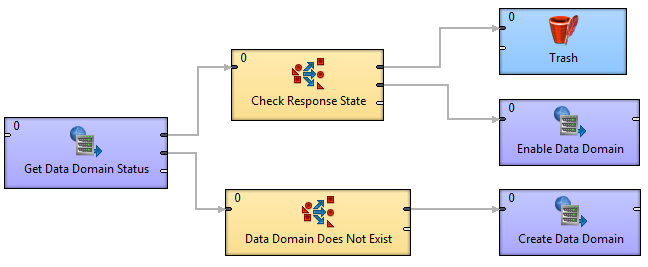

1. Initialise the data domain.

The graph in Figure 3 checks whether the data domain already exists. If it does, the domain is enabled; if not, it is created.
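The decision taken by that graph can be sketched in a few lines. Note that `DomainAdmin` is a hypothetical interface invented for this example and is not the Endeca Server API; the in-memory implementation exists only so the logic can be run.

```java
import java.util.HashMap;
import java.util.Map;

class InitDomainSketch {
    // Hypothetical abstraction over domain management; NOT the Endeca Server API.
    interface DomainAdmin {
        boolean exists(String name);
        void enable(String name);
        void create(String name);
    }

    // Same decision as the graph: enable the domain if present, create it otherwise.
    static String initialise(DomainAdmin admin, String name) {
        if (admin.exists(name)) {
            admin.enable(name);
            return "enabled";
        }
        admin.create(name);
        return "created";
    }

    // In-memory stand-in used only to exercise the logic above.
    static class InMemoryAdmin implements DomainAdmin {
        final Map<String, Boolean> enabledByName = new HashMap<>();

        public boolean exists(String name) { return enabledByName.containsKey(name); }
        public void enable(String name) { enabledByName.put(name, true); }
        // Assumption of this sketch: a new domain starts out enabled.
        public void create(String name) { enabledByName.put(name, true); }
    }

    public static void main(String[] args) {
        DomainAdmin admin = new InMemoryAdmin();
        System.out.println(initialise(admin, "twitter"));
        System.out.println(initialise(admin, "twitter"));
    }
}
```

Because both branches leave the domain available, the step can be run repeatedly without harm, which is convenient while the rest of the load is still being developed.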

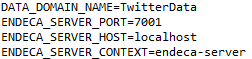

It is based on the “InitDataDomain.grf” graph from the sample “GettingStarted” project. For it to work, we only need to change the relevant properties in the “workspace.prm” file to match our Endeca Server and data domain:

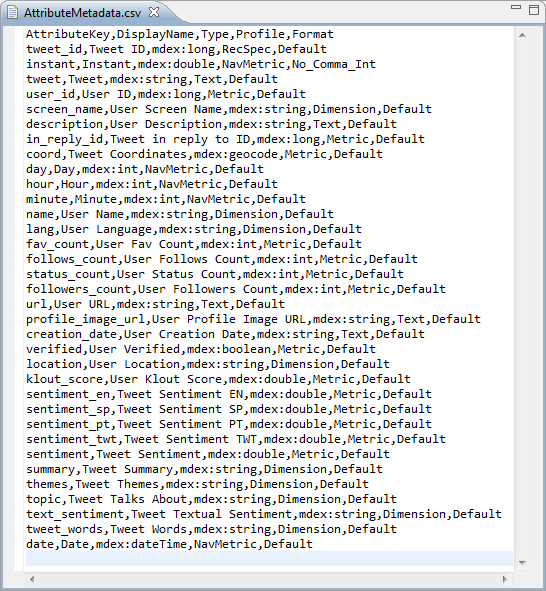

2. Load the attributes configuration.

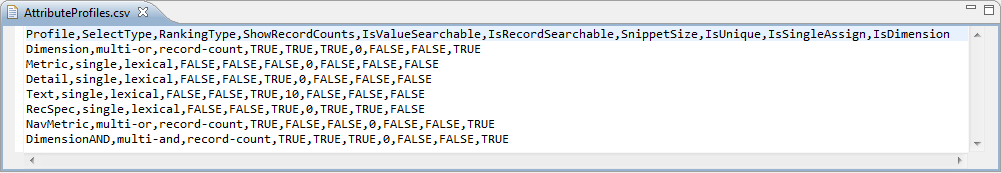

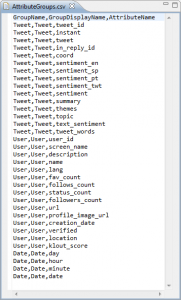

As mentioned before, it isn’t necessary to define the data model up front (hence Endeca’s flexibility). However, sometimes it is necessary to override some default attribute settings. As of the latest version of Endeca, attributes are “single assign” by default (an attribute cannot take multiple values in the same record). So if, for example, we want to store in a single field the list of words that make up the tags of a tweet, we need to set the “IsSingleAssign” property of that attribute’s profile to “false”. The following images show the content of the configuration files in our scenario (profiles, metadata and groups).

Figure 6: Attributes metadata and groups configuration files

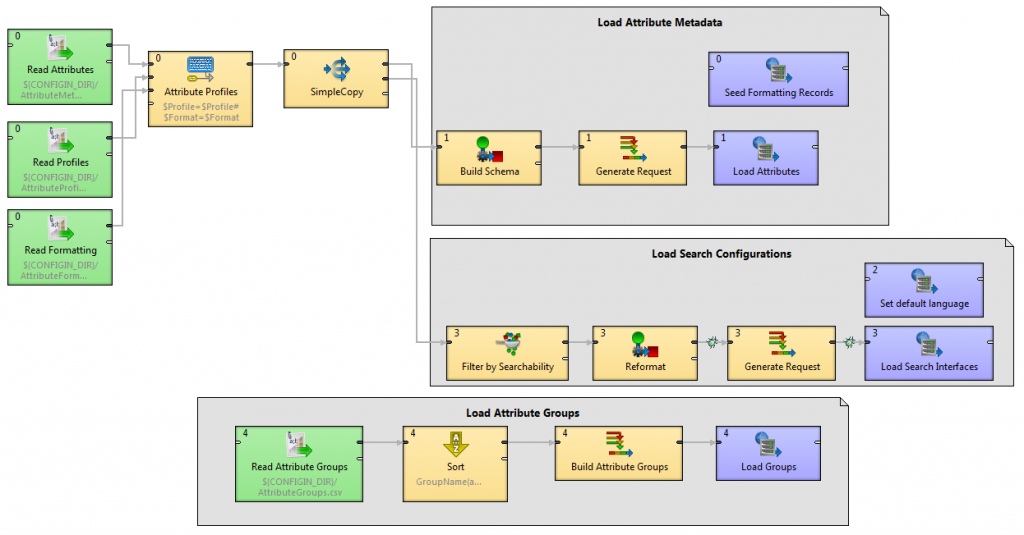
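The difference between single-assign and multi-assign attributes can be pictured with a toy record like the one below. This is only a conceptual sketch: the class is invented for the example, and rejecting a second value with an exception is an assumption made here to make the rule visible, not a description of how the Endeca Server reacts.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Toy model of one record; not an Endeca class.
class AssignSketch {
    private final Set<String> multiAssign = new HashSet<>();
    private final Map<String, List<String>> values = new HashMap<>();

    // Plays the role of setting IsSingleAssign to false for the attribute.
    void allowMultiple(String attribute) {
        multiAssign.add(attribute);
    }

    // Adds a value; a single-assign attribute accepts only the first one.
    void assign(String attribute, String value) {
        List<String> current = values.computeIfAbsent(attribute, k -> new ArrayList<>());
        if (!current.isEmpty() && !multiAssign.contains(attribute)) {
            throw new IllegalStateException(attribute + " is single assign");
        }
        current.add(value);
    }

    List<String> get(String attribute) {
        return values.getOrDefault(attribute, List.of());
    }

    public static void main(String[] args) {
        AssignSketch record = new AssignSketch();
        record.allowMultiple("Tags"); // hypothetical attribute name
        record.assign("Tags", "messi");
        record.assign("Tags", "goal");
        System.out.println(record.get("Tags"));
    }
}
```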

The “LoadConfiguration.grf” graph from the demo project can also be used here to set up the attribute properties, metadata, groups and basic search configuration (see the figure below). Some of those settings can be changed later on by running a modified configuration graph or directly through the OEID Studio.

Figure 7: Attribute configuration loading

3. Transform and load the data.

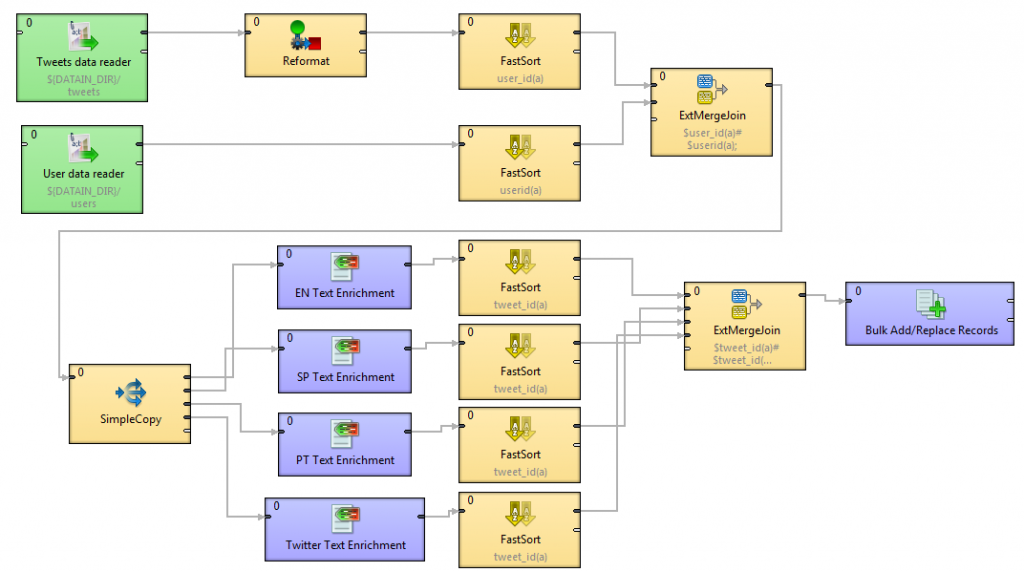

The last step is to take the data captured from Twitter, pass it through the text enrichment components to obtain semantic information (such as the topics the tweets talk about or their sentiment) and, finally, load everything into the Endeca Server.

Figure 8 depicts the ETL graph for that stage. Data extracted from the Twitter stream was stored in two separate data files: tweets and users. Both data flows are merged and then processed through four text enrichment components. These components call the external Lexalytics [1] analysis module to extract the sentiment, named entities and themes (among other information) from the tweet text in three different languages (English, Spanish and Portuguese) and through a special data set prepared for analysing Twitter messages. Afterwards, the processed data is merged and loaded into the Endeca Server, ready to be used in the OEID Studio to perform the analytics.

Finally, the data domain in the Endeca Server is loaded with our data, ready to be used through an OEID Studio application to start extracting useful information. In the next blog post, I will talk about how to build an OEID Studio application.
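In the Integrator this merge is done with graph components, but the idea behind combining the tweets and users flows can be sketched in plain Java. The example assumes both files share a `user_id` column to join on; that field name, like the other field names and the class itself, is an assumption made for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, named for illustration only.
class TweetUserJoin {
    // Adds the author's fields to each tweet row, matching on "user_id".
    // Tweets whose author is unknown are kept without user fields.
    static List<Map<String, String>> join(List<Map<String, String>> tweets,
                                          List<Map<String, String>> users) {
        Map<String, Map<String, String>> usersById = new HashMap<>();
        for (Map<String, String> user : users) {
            usersById.put(user.get("user_id"), user);
        }
        List<Map<String, String>> merged = new ArrayList<>();
        for (Map<String, String> tweet : tweets) {
            Map<String, String> row = new LinkedHashMap<>(tweet);
            Map<String, String> user = usersById.get(tweet.get("user_id"));
            if (user != null) {
                row.putAll(user);
            }
            merged.add(row);
        }
        return merged;
    }

    public static void main(String[] args) {
        List<Map<String, String>> tweets = List.of(
                Map.of("tweet_id", "1", "user_id", "u1", "text", "Gol de Messi"));
        List<Map<String, String>> users = List.of(
                Map.of("user_id", "u1", "user_language", "es"));
        System.out.println(join(tweets, users));
    }
}
```

Once each row carries the user language, it can be routed to the enrichment component configured for that language.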

Read the second part of Twitter and Oracle Information Discovery here:

[1] Lexalytics Text Analysis Software Engine: http://www.lexalytics.com/.