Boosting OBIEE project performance with Exalytics

29 Nov 2012

As part of the Oracle Engineered Systems portfolio, Oracle Exalytics is today on the radar of companies looking for high-performance, state-of-the-art BI platforms.

Exalytics combines specifically chosen hardware components with dedicated versions of the Oracle Business Intelligence software to deliver best-in-class performance by leveraging in-memory database techniques.

We have recently been working with customers to test the appliance's capabilities on their actual OBIEE implementations. The purpose of this blog entry is to share the main challenges and achievements of a real-life Exalytics deployment.

The initial setup of the appliance usually covers installing and configuring its software (OBIEE, TimesTen and Essbase). Even so, many challenges remain, for example:

– Develop an “aggregate creation strategy” that covers the particular needs of the customer. As the Production environment is usually owned by the customer's IT team, you also need to make sure they are comfortable with it.

– Put in place and document a shared methodology between the development and DBA teams to manage the TimesTen aggregate life cycle.

– Demonstrate the performance improvement of the new Exalytics environment over the previous one (e.g. by running the same test analyses on both environments and measuring response times).

Although you are likely to encounter a few more bumps on the road to deploying Exalytics, these three points are the main concerns to address in order to demonstrate the appliance's capabilities and gain the customer's trust in it.

Let’s now review the actions taken to tackle each point.

Develop an aggregate creation strategy

The main mechanism Exalytics uses to improve overall performance is an “adaptive memory cache” in which aggregated information is stored for faster retrieval. This is not a groundbreaking technique: the same paradigm has long been used in BI projects, usually by leveraging database materialized views and query rewrite. However, given the huge amount of RAM available (usually around 400 GB), we can load a large quantity of aggregate data, at several different levels, into the appliance memory using the TimesTen instance. Once the aggregates are loaded in memory, OBIEE fragmentation techniques allow the BI Server to route user queries to TimesTen whenever one of the available aggregates can serve the requested level of granularity.
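To make the routing idea concrete, here is a minimal sketch of aggregate navigation. It is not OBIEE's actual logic, which is configured in the RPD through logical table source fragmentation and aggregate content levels; it only assumes that an aggregate can answer a query when every requested dimension is stored at the requested level or a finer one, and it simply prefers the smallest qualifying aggregate. The hierarchies and aggregate names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical dimension hierarchies, listed from finest to coarsest level.
HIERARCHIES = {
    "Time": ["Day", "Month", "Quarter", "Year"],
    "Product": ["SKU", "Brand", "Category"],
}


@dataclass(frozen=True)
class Aggregate:
    name: str
    levels: dict     # dimension -> level at which the aggregate is stored
    row_count: int


def can_serve(agg: Aggregate, request: dict) -> bool:
    """True if the aggregate holds every requested dimension at the requested
    level or a finer one (finer rows can still be rolled up at query time)."""
    for dim, level in request.items():
        if dim not in agg.levels:
            return False  # dimension was summed away; cannot break down by it
        order = HIERARCHIES[dim]
        if order.index(agg.levels[dim]) > order.index(level):
            return False  # aggregate is coarser than the request
    return True


def route(request: dict, aggregates: list) -> str:
    """Pick the smallest aggregate that can serve the request, else the warehouse."""
    candidates = [a for a in aggregates if can_serve(a, request)]
    if not candidates:
        return "DATA_WAREHOUSE"
    return min(candidates, key=lambda a: a.row_count).name


if __name__ == "__main__":
    aggs = [
        Aggregate("ag_month_brand", {"Time": "Month", "Product": "Brand"}, 5_000),
        Aggregate("ag_quarter_category", {"Time": "Quarter", "Product": "Category"}, 200),
    ]
    print(route({"Time": "Year", "Product": "Category"}, aggs))  # ag_quarter_category
    print(route({"Time": "Month"}, aggs))                         # ag_month_brand
    print(route({"Time": "Day"}, aggs))                           # DATA_WAREHOUSE
```

A query at day level falls back to the data warehouse because no aggregate is stored that finely, which is exactly the case where loading the right aggregates matters.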

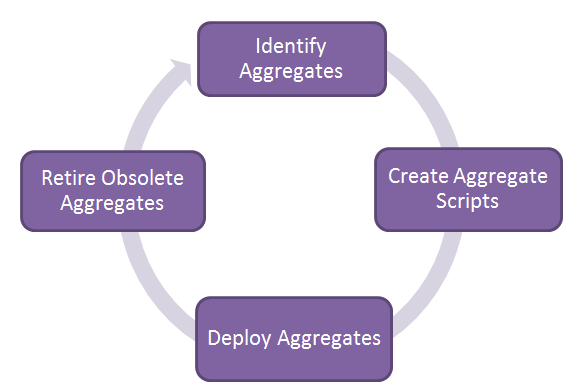

In short, what matters most is having the right aggregates loaded into TimesTen memory. These aggregates are created, refreshed and eventually become obsolete in what is called the “aggregate life cycle”.

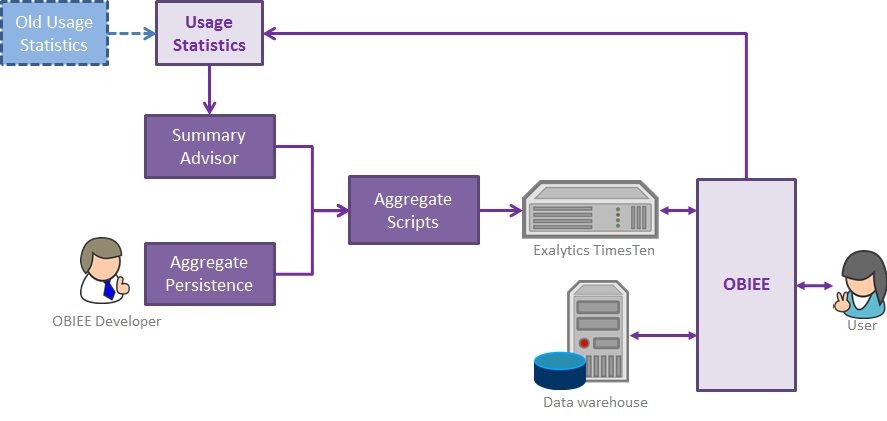
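The life cycle can be thought of as a small state machine. The sketch below is a hypothetical model of our own (the state names and allowed transitions are assumptions, not an Oracle API): an aggregate is created, loaded, becomes stale after each source refresh until it is reloaded, and is eventually retired.

```python
from enum import Enum


class AggState(Enum):
    CREATED = "created"
    LOADED = "loaded"      # populated in TimesTen and usable by queries
    STALE = "stale"        # source data refreshed, aggregate not yet reloaded
    OBSOLETE = "obsolete"  # no longer useful; to be dropped


# Allowed transitions in this simplified, hypothetical model.
TRANSITIONS = {
    AggState.CREATED: {AggState.LOADED},
    AggState.LOADED: {AggState.STALE, AggState.OBSOLETE},
    AggState.STALE: {AggState.LOADED, AggState.OBSOLETE},
    AggState.OBSOLETE: set(),
}


class AggregateLifecycle:
    def __init__(self, name: str):
        self.name = name
        self.state = AggState.CREATED
        self.history = [self.state]

    def can_move_to(self, new_state: AggState) -> bool:
        return new_state in TRANSITIONS[self.state]

    def move_to(self, new_state: AggState) -> None:
        if not self.can_move_to(new_state):
            raise ValueError(
                f"{self.name}: cannot go from {self.state.value} to {new_state.value}"
            )
        self.state = new_state
        self.history.append(new_state)
```

A nightly cycle would then be `LOADED -> STALE` when the ETL updates the warehouse and `STALE -> LOADED` once the aggregate scripts have been re-run.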

Exalytics provides two main techniques for creating aggregates. One is the Summary Advisor, a new tool created for Exalytics that uses present and past usage tracking statistics to suggest the most suitable aggregates for our OBIEE deployment. The other is the existing Aggregate Persistence wizard, which lets us create tailored aggregates at the required levels of detail and deploy them into the TimesTen instance.

The Summary Advisor is the recommended option, but it relies heavily on the gathered statistics. It is therefore most useful where plenty of usage statistics already exist, for example when deploying new capabilities on a live Exalytics environment.

However, in brand-new production environments, which are usually in a controlled rollout, the pool of usage tracking statistics is not large enough for the Summary Advisor to suggest any meaningful aggregates. The only option is then to create them manually with the Aggregate Persistence wizard.

Although this may seem a big drawback, it has its advantages, as it gives the data modeler full control over the desired level of detail.
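The Aggregate Persistence wizard produces a logical SQL script built around a `create aggregates ... for ... at levels ... using connection pool ... in ...` statement. As a sketch of what that full control looks like, the helper below assembles such a statement from its parts; the clause order follows the documented Aggregate Persistence syntax, but every business model, level, connection pool and schema name is hypothetical, and the generated text should be checked against your own repository and OBIEE version before running it.

```python
def q(*parts: str) -> str:
    """Quote a multi-part repository name, e.g. q("Sales", "Time", "Month")."""
    return ".".join(f'"{p}"' for p in parts)


def create_aggregate_sql(name, fact, measures, levels, connection_pool, schema):
    """Build one 'create aggregates' statement.

    fact            -- (business model, logical fact table)
    measures        -- logical fact columns to aggregate
    levels          -- list of (business model, dimension, level) tuples
    connection_pool -- (physical database, connection pool)
    schema          -- parts of the target physical schema in TimesTen
    """
    measure_list = ", ".join(q(m) for m in measures)
    level_list = ", ".join(q(*lvl) for lvl in levels)
    return (
        "create aggregates\n"
        f"{q(name)}\n"
        f"for {q(*fact)}({measure_list})\n"
        f"at levels ({level_list})\n"
        f"using connection pool {q(*connection_pool)}\n"
        f"in {q(*schema)};"
    )


if __name__ == "__main__":
    print(create_aggregate_sql(
        name="ag_sales_month_brand",
        fact=("Sales", "Fact Sales"),
        measures=["Revenue", "Units"],
        levels=[("Sales", "Time", "Month"), ("Sales", "Product", "Brand")],
        connection_pool=("TimesTen", "TT Pool"),
        schema=("TimesTen", "EXALYTICS"),
    ))
```

Keeping the definitions in a small generator like this (or simply in version control) makes it easier to hand a reviewed, repeatable script over to the DBA team.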

Nevertheless, as more and more statistics are gathered through use, we suggest shifting to the Summary Advisor as the preferred way of creating aggregates.
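The Summary Advisor's actual algorithm is Oracle's own and is not reproduced here. To illustrate why a usage-driven approach needs a decent volume of statistics, here is a simplified, hypothetical ranking: group logged queries by the grain they requested, rank grains by cumulative elapsed time, and refuse to suggest anything below a minimum number of logged queries. The input format and the `min_queries` threshold are assumptions.

```python
from collections import defaultdict


def suggest_grains(usage_rows, top_n=3, min_queries=20):
    """Rank requested grains by total time spent answering them.

    usage_rows -- iterable of (grain, elapsed_seconds) pairs, where grain is a
                  collection of (dimension, level) pairs (hypothetical format).
    Returns up to top_n grains, or [] when there is too little history.
    """
    total_time = defaultdict(float)
    count = 0
    for grain, elapsed in usage_rows:
        # Sort so that the same grain always maps to the same key.
        total_time[tuple(sorted(grain))] += elapsed
        count += 1
    if count < min_queries:
        return []  # e.g. a brand-new deployment: nothing meaningful to suggest
    ranked = sorted(total_time.items(), key=lambda kv: kv[1], reverse=True)
    return [grain for grain, _ in ranked[:top_n]]
```

With only a handful of logged queries the function returns nothing, which mirrors the situation in a new environment where aggregates have to be defined by hand.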

Create a methodology to manage the aggregate “life cycle”

In this approach, the development team has the non-trivial task of identifying the required aggregates and generating their scripts with the Aggregate Persistence wizard. Once generated, the scripts are passed to the DBA team, who are responsible for executing them to actually create and deploy the aggregates.

We believe this method is very convenient for customers with separate development and DBA/systems teams. It lets the developers focus on interacting with the business users to discover query patterns, while handing TimesTen administration and troubleshooting over to the DBA team.

Executing the scripts once is not enough, however: the data in the TimesTen instance must be refreshed every time the source data is refreshed.

It is therefore convenient to tie the refresh to the ETL process. Typically, a nightly ETL batch updates the data warehouse with the previous day's data; as soon as the batch finishes, the TimesTen aggregates can be refreshed.

However, at the time of writing there is no built-in method to schedule and monitor these scripts. The best solution we can suggest today is to schedule them on the Exalytics system via cron. It is not the optimal solution, but it does the job.
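A minimal sketch of such a cron-driven refresh, assuming the nightly ETL writes a success flag file when it completes and that the aggregate script is run with OBIEE's `nqcmd` client (options `-d` data source, `-u` user, `-p` password, `-s` script file). The flag convention, DSN, user and file names are hypothetical, and `nqcmd` normally needs the OBIEE environment to be initialised before it is called. The wrapper could be scheduled with a crontab entry such as `30 2 * * * /usr/bin/python3 /home/oracle/refresh_aggregates.py` (path and time are placeholders).

```python
import subprocess
from pathlib import Path


def build_refresh_command(nqcmd, dsn, user, password, script):
    """nqcmd options: -d ODBC data source, -u user, -p password, -s SQL script file."""
    return [nqcmd, "-d", dsn, "-u", user, "-p", password, "-s", str(script)]


def refresh_if_etl_done(flag: Path, cmd, run=subprocess.run) -> bool:
    """Run the aggregate refresh only after the nightly ETL has signalled success."""
    if not flag.exists():
        return False  # ETL not finished or failed: keep yesterday's aggregates
    run(cmd, check=True)  # raises CalledProcessError if the command exits non-zero
    flag.unlink()         # consume the flag so the next run waits for a new ETL
    return True
```

In the scheduled script, `refresh_if_etl_done(Path("/data/etl/etl_success.flag"), build_refresh_command("nqcmd", "AnalyticsWeb", "weblogic", password, "refresh_aggregates.sql"))` would do the work, with the password read from a protected location rather than hard-coded. The `run` parameter exists only so the logic can be tested without calling `nqcmd`.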

That said, we expect that in the near future more Exalytics deployments will use Oracle Data Integrator or Oracle GoldenGate to create and refresh the TimesTen aggregates through full ETL processes, rather than relying on the scripts generated by the Aggregate Persistence wizard.

Demonstrate the performance improvement over the old system

Once the aggregates are flowing into the TimesTen database, the last question is obvious: how does it perform, and how much faster is it really?

We ran some tests comparing Exalytics against an existing Development environment. Even allowing for the obvious gap in hardware performance (which is, after all, one of the reasons to go for Exalytics: the sheer power of the machine!), the difference is evident. The people watching the test dashboard were amazed by the results: it is blazing fast. We consistently experienced sub-second latency on analyses that fetched their data from memory, which makes for a great user experience.

But we wanted to go beyond first impressions, so we ran the maths on the queries and compared the results with the older environment. To do this, we simulated three different scenarios and measured query execution times.
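A simple way to make such measurements repeatable is to run each test query several times per environment and compare medians. In the sketch below, `run_query` stands for any callable that submits the same analysis to one environment (for instance through `nqcmd` or an ODBC connection); that callable is hypothetical. We also assume the BI Server query cache is disabled or bypassed for these runs, otherwise repeated executions would measure the cache rather than the database.

```python
import statistics
import time


def median_runtime(run_query, repeats=5):
    """Run a query callable several times and return the median wall-clock seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def speedup(baseline_seconds, new_seconds):
    """How many times faster the new environment is than the baseline."""
    return baseline_seconds / new_seconds
```

The median is used rather than the mean so that a single slow run (a cold start, a busy network) does not dominate the comparison.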

Scenario 1: High aggregation level

In this scenario we simulated a high degree of aggregation, that is, a base table of several million rows aggregated into a few thousand rows.

We used a fact table containing 3M rows and aggregated it at a high level, down to 5K rows. This is, of course, one of the best-case scenarios, and the queries ran an impressive 270 times faster.

This level of aggregation is not usual, but it can be observed on very detailed data sets such as inventory transactions or financial journal movements. Analysis of this data is seldom performed at the detailed level; users rather want to analyze it at higher levels of aggregation, which makes these aggregates very useful.

Scenario 2: Data already aggregated on the data warehouse

Given the results of the first test, we wanted to see whether it is really worth aggregating data that was already aggregated in the data warehouse before Exalytics arrived. We took an existing medium-level aggregate table and aggregated it a bit further into memory. The base table contained around 5K rows, which we aggregated down to 500 rows.

The analysis running against the data warehouse already had near sub-second latency, and when executed against TimesTen we measured values of tenths of a second (around 50x faster). This improvement was barely perceptible to the eye, but it allowed us to demonstrate the benefit of the new Trellis views on the Exalytics appliance, which kept sub-second latency against TimesTen but took longer to render against the data warehouse.

Scenario 3: Detail data on the TimesTen memory

We were also asked to test fitting the whole data warehouse into TimesTen memory. This is not really how Exalytics is meant to be used and, depending on your RPD modeling techniques, might not be completely feasible. Anyway, we wanted to see how TimesTen performs when reporting over big tables containing detailed data.

To simulate this, we created a script with the Aggregate Persistence wizard, selecting all dimensions at detail level, and then loaded the resulting “aggregate”. This produced a table of nearly 2M rows in memory, much bigger than the previous aggregates. When running the test dashboard with aggregated analyses over this data set, we observed queries around 10x faster.

These results were as expected: we get faster data retrieval but nothing more, because TimesTen still has to aggregate the detail data at query time, and this can even be slower than in some other RDBMSs.
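Putting the three scenarios side by side makes the pattern easier to see. The snippet below only restates the figures reported above and computes how many source rows each in-memory row stands for; for scenario 3 we assume the in-memory table has roughly as many rows as its source, since all dimensions were taken at detail level. Three data points do not establish a rule, but they are consistent with the idea that the gain grows with the amount of aggregation work the in-memory table saves.

```python
# Figures as reported in the three scenarios:
# (scenario, source rows, rows held in TimesTen, reported speedup)
SCENARIOS = [
    ("1: high aggregation", 3_000_000, 5_000, 270),
    ("2: already aggregated", 5_000, 500, 50),
    ("3: detail data", 2_000_000, 2_000_000, 10),  # assumption: no row reduction
]


def reduction_factor(source_rows, stored_rows):
    """How many source rows each in-memory row summarizes."""
    return source_rows / stored_rows


for name, source, stored, reported_speedup in SCENARIOS:
    ratio = reduction_factor(source, stored)
    print(f"Scenario {name}: {ratio:,.0f}:1 row reduction, ~{reported_speedup}x faster")
```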

Conclusion

After working on enabling Exalytics on a real OBIEE implementation, we have an overall positive impression. The appliance delivers what it is meant to deliver: lightning-fast analytics. It is true, however, that some aspects of the aggregate life cycle could be polished, and we hope Oracle will address these flaws based on the feedback submitted to them.

In summary, the best features that Exalytics offers existing OBIEE customers are:

1. Easy deployment and configuration of the appliance, carried out by Oracle Advanced Customer Support.

2. Straightforward integration with your current OBIEE solution

- If you already have usage statistics, you can benefit from Exalytics from day one by using the Summary Advisor.

- If you don't have statistics (i.e. a new OBIEE deployment), you can still create aggregates on demand with the Aggregate Persistence wizard, a more time-consuming process.

3. Consistent sub-second latency when hitting TimesTen memory.

4. An excellent user experience, thanks to the good performance and to the new visualizations and improvements in OBIEE for Exalytics.

If you want to talk about your Exalytics deployment, or if you are thinking of acquiring one, drop us a line at info@clearpeaks.com and we'll be happy to help you.