28 Jun 2017 DATA QUALITY WITH INFORMATICA – PART 3: DATA DEDUPLICATION

In the previous article in the series about Data Quality with Informatica we learned that Informatica has a lot of useful features to standardize data, and that it is a very user-friendly tool whilst still offering enough flexibility to perform complex standardization tasks. In this article, we´ll focus on Data Deduplication.

In the previous article in the series about Data Quality with Informatica we learned that Informatica has a lot of useful features to standardize data, and that it is a very user-friendly tool whilst still offering enough flexibility to perform complex standardization tasks. In this article, we´ll focus on Data Deduplication.

Introduction

The process of data deduplication consists of two parts: the first is data matching, where we try to find duplicates or the likelihood of records being the same, and from which we obtain a score of similarity between records. The second part of the data deduplication process is data consolidation, that is, the deletion of the records that we have identified as duplicates whilst keeping the masters.

1. Record Matching

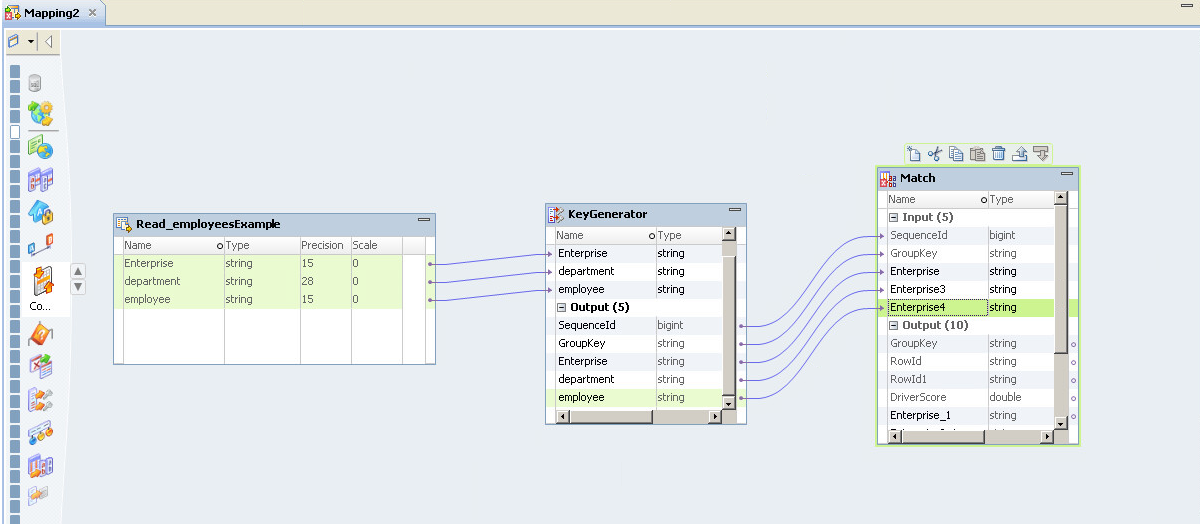

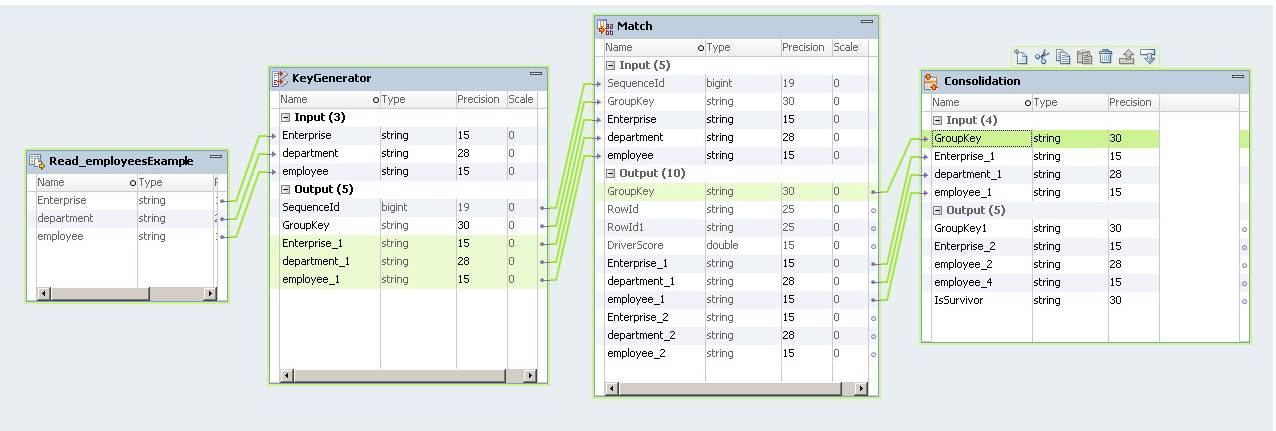

In this section we are going to explain some Informatica transformations that simplify the process of finding duplicates in our data. The process of data deduplication may use a large amount of resources, since we will be making many comparisons in a large data file, so bearing this in mind, the first step is to group the data so we can make the comparisons over small groups and not the whole dataset. In order to do this, Informatica provides the key generator transformation; grouping the data before the matching process will significantly reduce the time needed when looking for matches in the dataset. Create a mapping and add the file input, a key generator transformation and a match transformation. The output of the key generator will be the input for the match transformation. We will review the data using the data preview, so there is no need for a valid mapping with a defined target.

|

Figure 1: Mapping for record matching |

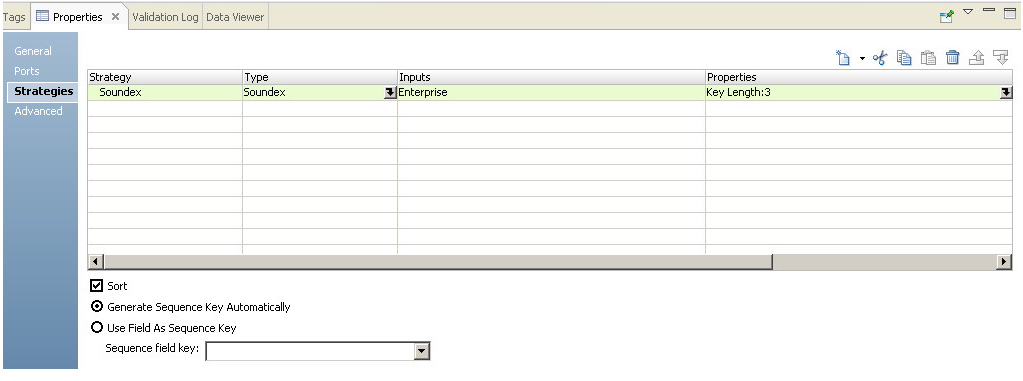

The first transformation is intended to reduce some groups in order to make the match transformation more efficient; we are going to configure the key generator transformation to generate a new sequence and a Soundex strategy. Soundex works for characters in the English alphabet, using the first character of the input string as the first character in the return value and encodes the remaining three unique consonants as numbers. We will use the enterprise column in the strategy:

|

Figure 2: Configuration of the KeyGenerator transformation to use the Soundex strategy |

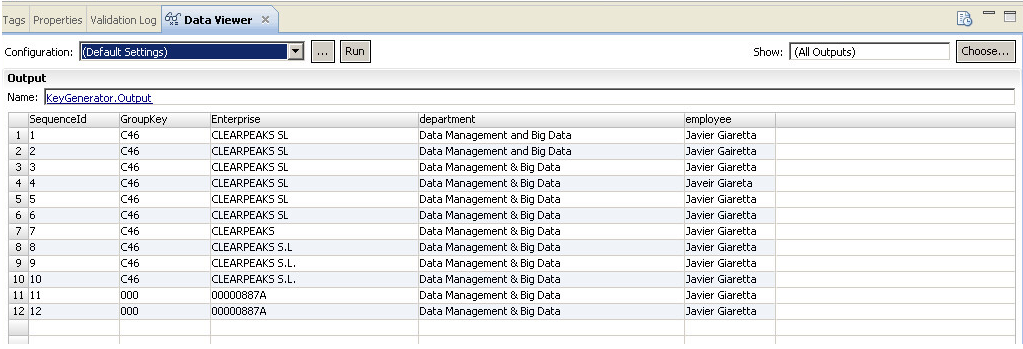

Now we can preview the data in the key generator transformation, and we can see that there are several values that are likely to be duplicated, as well as two groups that have been generated according to the Soundex strategy:

|

Figure 3: Results preview of the KeyGenerator transformation |

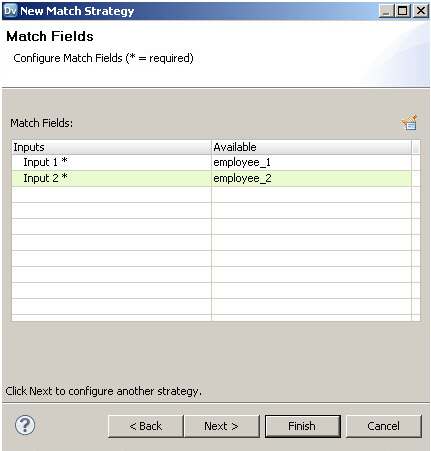

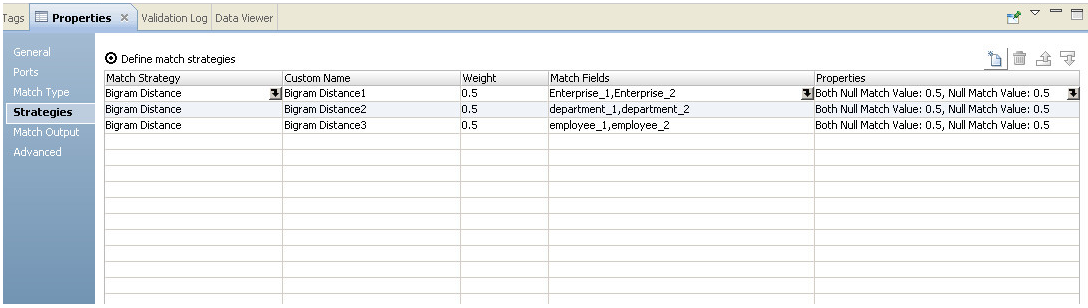

Now we are going to add three strategies to the Match transformation, using the Bigram distance for field matching. We can select four different measures of distance between the fields, Bigram, Edit, Hamming Jaro and Reverse Hamming Each of these is intended for specific cases and will work better or worse depending on the values of the column. In this case we are going to use Bigram, as the values are small. The transformation creates a temporary column to compare the values, so in every strategy we select the original port and the temporary one, named <port_name>_2 :

|

Figure 4: Configuring the fields that will be used in the record match strategy |

We are going to create three strategies using the same distance, so all three columns in our file will be taken into account to find the duplicates. The first strategy will measure the likelihood for the enterprise column, the second for the department and the third for the employee:

|

Figure 5: Configuring strategies for record matching |

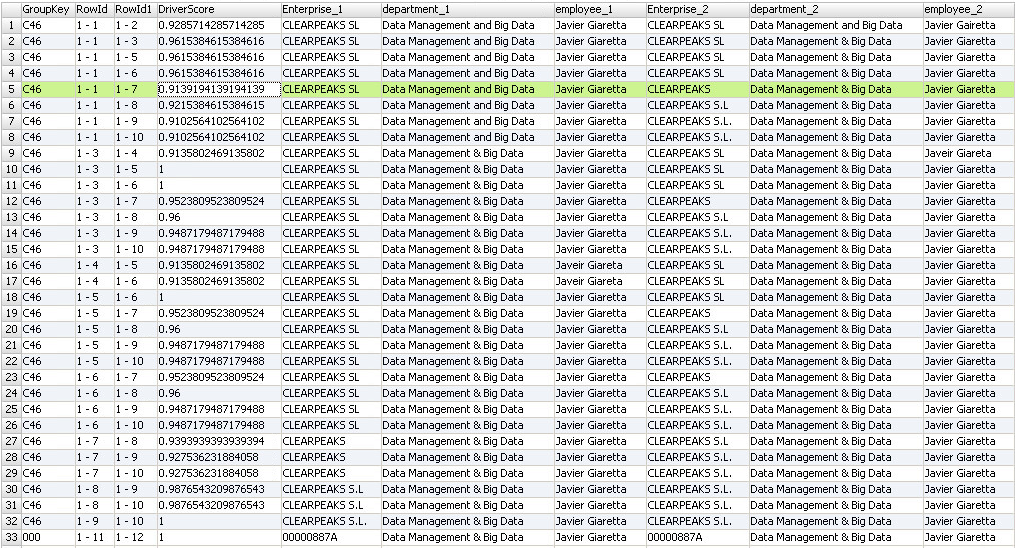

Once we have defined the strategies we can preview the data of the transformation and analyse the results. The output of the transformation only shows the records that have some probability of being duplicates, and the driver score shows the degree of likelihood of being duplicates. Depending on the number of strategies defined for the columns we will view more columns in the results (two additional columns per strategy), and the values are the value of the record and the value of the record it has been compared to:

|

Figure 6: Results preview of the Match transformation, showing likelihood scores for three different strategies |

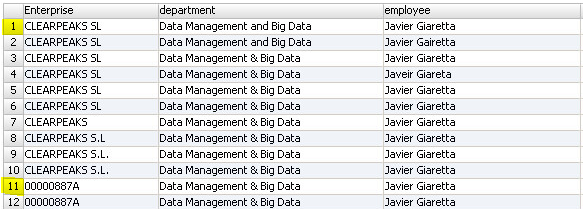

The degree of similarity is shown in the driver score column, with 1 being a perfect match. We can adjust the weight of each different strategy to give more importance to some columns. Looking at this result we can see that the first record (1-1) matches with the second record of the file (1-2) with a score of 0.9257, and that the difference comes from the employee name, as it contains a typo error in the second row of the file (employee_2). We can also see that there are perfect matches for rows 3, 5 and 6 (as the output score is 1) and that columns 11 and 12 are duplicated, so we could consolidate the original file into a couple of records, keeping just the highlighted rows in the source file:

|

Figure 7: Preview of the data showing a large number of duplicates. After the consolidation only the highlighted records will be maintained. |

At some point we will have to decide which are the valid records that we should keep this process is known as data consolidation. There is another transformation called Comparison that works in a similar way, in that we can choose different strategies and distance measures, but it compares the columns in a record instead of different records. This is just one example of what can be done in a few simple steps, but note that the different transformations that work identifying duplicates can be configured in different ways, as well as the key generator transformation.

For a complete description of the transformations, the different distance measures specifications and how to select the one that best suits your needs, you should refer to the INFA transformation reference. Among these transformations there is another interesting one called Identity Match. The advantage of this transformation it that it offers the ability to match name and address data without the need for standardization prior to the matching. The identity match transformation performs matching operations on input data at an identity level; an identity is a set of columns providing name and address information for a person or organization. The transformation treats one or more input columns as a defined identity and performs a matching analysis between the identities it locates in the input data.

2. Data Consolidation

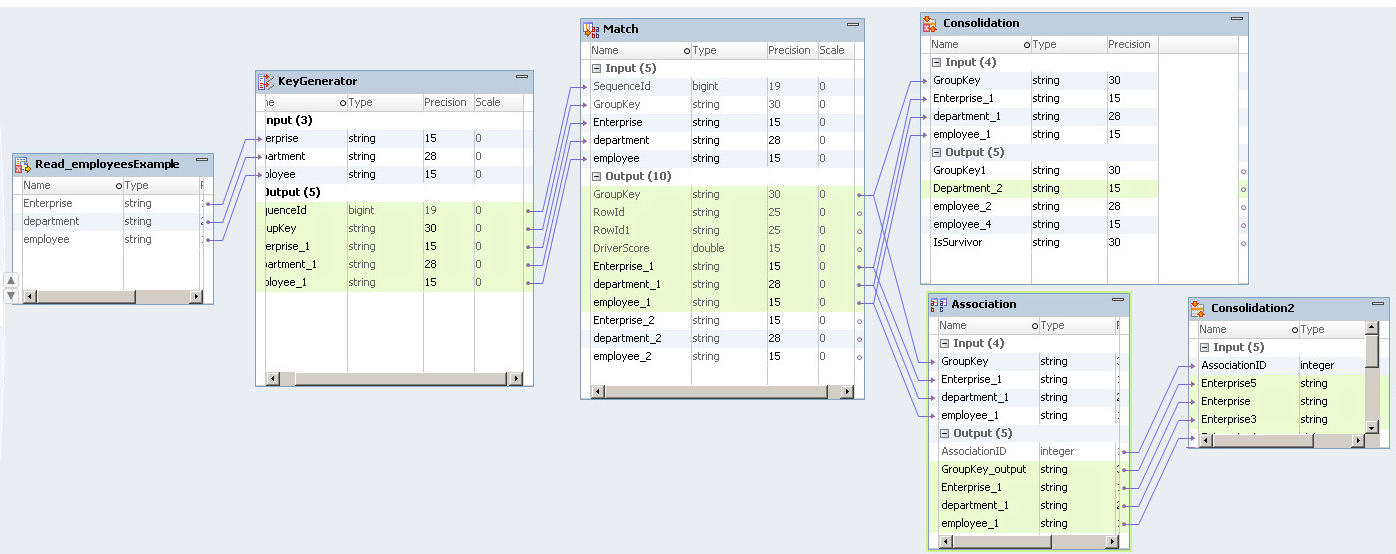

Data consolidation is the next step in data deduplication. Once we have found the matching records, we can automatically consolidate the records to obtain a master list of unique rows. To do this, we add the consolidation transformation to the mapping we had. The consolidation transformation can be configured in different ways, but basically it will group the records selecting one key column, so it will keep one record per group depending on the strategy we have selected. By default it will create an output port called IsSurvivor that shows if the record is the master or not. In our example we are going to add the consolidation transformation and drag and drop the output ports from the matching transformation into the consolidation transformation as shown below:

|

Figure 8: Mapping for record matching and data consolidation |

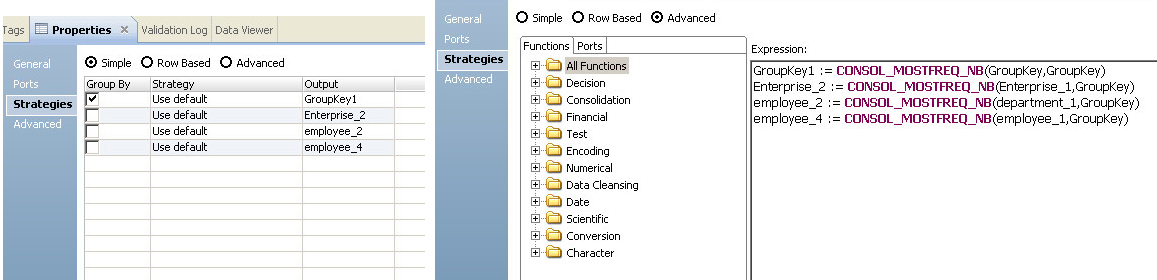

We have to be careful when selecting the group field, as it will determine the output of the transformation. In this case, we are going to configure a simple strategy, grouping by groupkey1 and with a most frequent non-blank strategy in every column. We can add more complex or customized strategies by selecting advance and writing out code; for instance, we may want to create a custom strategy that could depend on the matching score generated by the previous matching transformation.

|

Figure 9: Configuring the grouping strategy for the data consolidation |

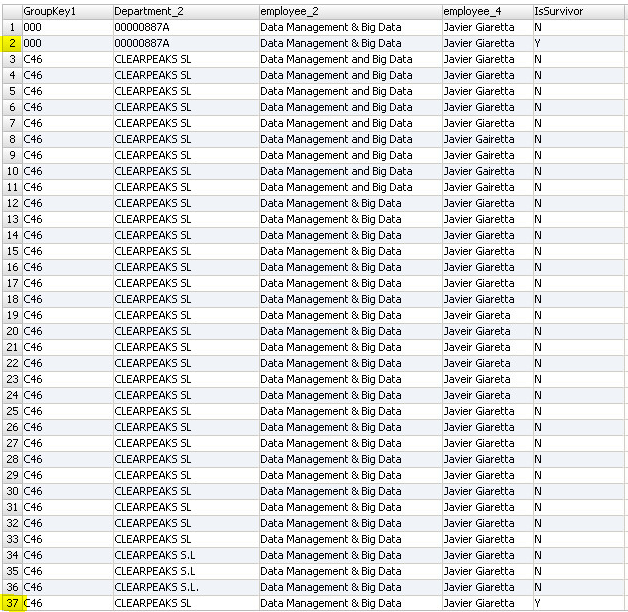

If we preview the results of the transformation we can see the survivor records:

|

Figure 10: Results preview of the consolidation process. The highlighted rows are selected as the surviving records of the de-duplication. |

These will be our master records in this example. From the above result we might think that there are two survival records when there should be only one, as the department and the employee are the same. This happens because we are consolidating the data grouping by the cluster id, so we still have to find the duplicates by employee and department. In order to implement multiple grouping and multiple match criteria in a single mapping we can use the association transformation. With this transformation we can consolidate the data as if they belonged to the same cluster. We’re going to configure the association transformation to associate the department and the employee name, make a second consolidation (consolidation2), and then check the results.

|

Figure 11: Final mapping for record matching and data consolidation using multiple startegies and match criteria. |

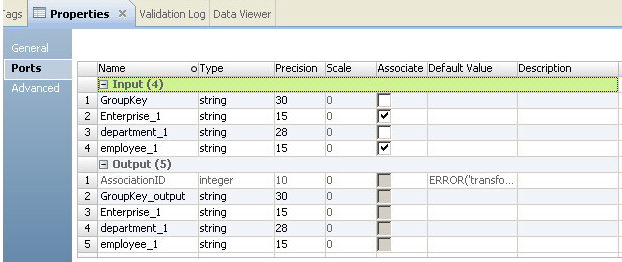

The figure below shows the properties tab for the association transformation:

|

Figure 12: Configuration of the Association transformation |

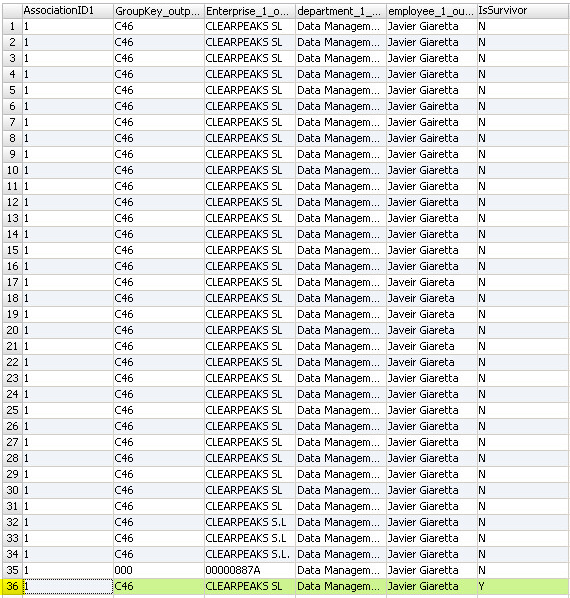

With this change, the association id will be common for both output clusters coming from the match transformation. If we check the results now we can see that we have only one survivor record:

|

Figure 13: Final results of the matching and de-duplication process. We can see only one surviving record for each set of duplicate records. |

Conclusion

In this example we have seen how we can consolidate data automatically with Informatica DQ. It is important to note that automatic does not mean intelligent, as we have to set up the transformations properly, carefully selecting the measures of distance whilst paying special attention to the data grouping. This article concludes our series about Data Quality with Informatica.

If you would like to know more about the Data Quality Services we offer click here!